Introduction

Artificial Intelligence (AI) is undevelopment at a breakneck speed. In recent years, large-scale sound models (LLMs) such as OpenAI’s GPT series and Meta’s LLaMA have radically transformed workflows, Education, research, and automated media. However, the underlying infrastructure to deploy these dense, all-parameter models requires colossal computational resources — GPUs, TPUs, and distributed clusters — making it inaccessible for many businesses, developers, and research institutions. Enter DeepSeek‑MoE, a novel and highly efficient AI paradigm. DeepSeek‑MoE leverages the Mixture-of-Experts (MoE) approach, activating only relevant subsets of its model for each input rather than the entirety of its neural parameters. This selective activation drastically reduces computational costs while enhancing performance in domain-specific tasks.

- What DeepSeek‑MoE is and how it differs from dense LLMs

- The underlying mechanics of Mixture-of-Experts architectures

- Architectural innovations unique to DeepSeek‑MoE

- Benchmark performance, GPU efficiency, and cost optimization

- Real-world use cases in enterprises, specialized domains, and education

- Deployment strategies, challenges, and the future trajectory of MoE models

What is a Mixture-of-Experts (MoE) Model?

The Fundamentals Explained in Plain Terms

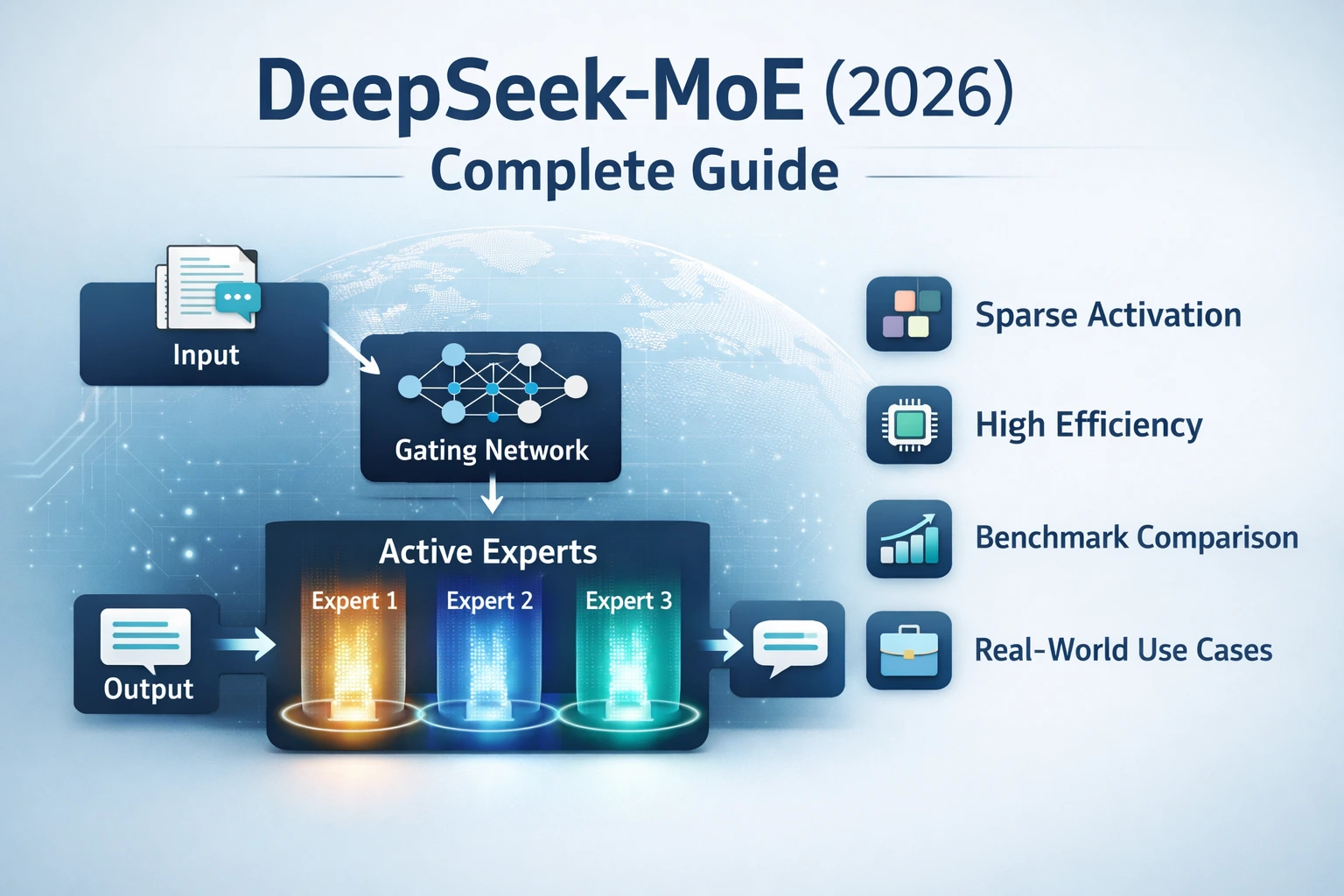

Traditional AI architectures are “dense”: every neuron and parameter in the model participates in processing every input. While this approach ensures consistency, it is computationally inefficient and resource-intensive. A Mixture-of-Experts (MoE) architecture adopts a different philosophy. Rather than activating the entire model, it comprises multiple “expert” subnetworks, and only a subset of these experts is triggered for any given input. This selective mechanism reduces redundancy, improves efficiency, and allows for scalable growth without linear increases in compute costs. Think of it like consulting a panel of domain specialists: if you have a legal question, you don’t ask the mechanical engineer — you consult the lawyer. The AI dynamically mimics this selective expertise allocation.

Core Principles of MoE

Sparse Activation

Sparse activation refers to the strategy of activating only a small fraction of experts per input token, leaving most subnetworks idle. This concept is central to MoE efficiency, as compute expenditure scales with active experts, not total model size.

Gating Networks

A gating mechanism — typically a trainable neural submodule — decides which experts should process the input. The gate evaluates the token or sentence, assigns probability scores to each expert, and routes the input accordingly.

Expert Specialization

- Finance and accounting

- Legal reasoning

- Technical code synthesis

- Medical terminology

Scalability

Because only a fraction of experts are active at any time, MoE models can scale to hundreds of billions of parameters while maintaining manageable computational costs. This opens the door to ultra-large AI systems that would otherwise be infeasible.

DeepSeek‑MoE Architecture: A Technical Deep Dive

Normalized Sigmoid Gating

- Balanced expert utilization

- No expert is overworked or underutilized

- Smooth gradient flow during backpropagation

Shared Expert Groups

Certain experts remain always active, handling generic linguistic tasks and providing context continuity, while other experts are dynamically chosen for specialized queries.

Fine-Grained Expert Segmentation

Experts are grouped according to semantic or domain slices: business, science, technology, languages, etc. This structured segmentation improves accuracy for domain-specific reasoning.

Optimized Parallelism

DeepSeek‑MoE is designed for multi-GPU and multi-node deployment, minimizing inter-GPU communication overhead and ensuring near-linear scaling in large clusters.

Conceptual Block Diagram

| Component | Function |

| Input Layer | Accepts raw text input |

| Gating Network | Selects relevant expert modules dynamically |

| Expert Layers | Activate only the chosen experts per token |

| Shared Experts | Provide general-purpose knowledge across all inputs |

| Output Layer | Generates final predictions or responses |

This modular design achieves high efficiency without sacrificing accuracy.

How DeepSeek‑MoE Operates: Example Workflow

A user submits a textual prompt. The gating network evaluates the input and selects 3–5 Relevant expert modules. The chosen experts process the tokens in parallel. Shared experts inject general knowledge to complement specialized outputs. The output layer aggregates results, producing a coherent, contextually accurate response.

Performance Benchmarks & Advantages

Comparative Benchmark Table

| Metric | DeepSeek‑MoE | GPT‑4 | LLaMA |

| Parameter Activation | Sparse | Dense | Dense |

| Compute per Token | 30–50% lower | High | High |

| GPU Memory Usage | Reduced | High | Moderate |

| Inference Latency | Lower / Faster | Moderate | Moderate |

| Scalability | Excellent | Limited | Limited |

Key Performance Benefits

Cost Efficiency

Sparse activation drastically reduces GPU hours and energy expenditure, enabling more economical deployments.

High Scalability

Ultra-large parameter models are feasible without exponential cost growth.

Superior Domain Accuracy

Specialized experts enhance domain-specific reasoning, outperforming dense general-purpose models in vertical applications.

5. DeepSeek‑MoE vs Dense Models (GPT‑4 & LLaMA)

| Feature | DeepSeek‑MoE | GPT‑4 / LLaMA |

| Activation | Sparse | Full |

| Cost Efficiency | High | Higher cost |

| Task Specialization | Expert-level | General-purpose |

| Inference Speed | Faster | Slower |

| Hardware Requirements | Flexible | High |

| Optimal Use Case | Enterprise/niche | Broad AI tasks |

Practical Use Cases & Applications

Enterprise AI

- Chatbots: Context-aware, cost-efficient, capable of remembering long histories.

- Knowledge Management: Summarize corporate documents, understand internal jargon, and generate actionable insights.

- Customer Support Systems: Faster, intelligent replies with product-category experts.

Open-Source & Academic AI

- Small GPUs can run DeepSeek‑MoE efficiently, ideal for research labs and universities.

- Enables rapid experimentation and model fine-tuning.

- Useful for educational AI tools, providing students access to specialized AI without high costs.

Specialized Domain AI

- Legal Reasoning: Lawyers deploy legal expert modules for accurate legal advice.

- Medical Text Interpretation: Medical experts process terminology efficiently.

- Code Generation: Specialized programming experts enhance accuracy.

- Multilingual Translation: Language experts optimize translation quality.

Engineering Complexity

- Communication overhead in all-to-all GPU exchanges.

- Training complexity due to expert balancing.

- Deployment complications on hardware optimized for dense models.

Debugging Challenges

Sparse expert activation makes tracing errors more difficult, as only a fraction of the network is active for any input.

The Future of MoE and DeepSeek

- Improved routing algorithms for dynamic expert selection.

- Faster distributed training with minimal communication overhead.

- User-friendly open-source tools for democratized access.

Pros & Cons

Advantages

Efficient computation

Lower operational costs

Superior task specialization

Scalable to ultra-large sizes

Open-source friendly

Limitations

Complex training procedures

Advanced hardware is often required

Debugging and monitoring are challenging

FAQs

A: Its sparse activation makes it ideal for small teams without access to massive GPUs.

A: While GPT‑4 excels in broad tasks, DeepSeek‑MoE often surpasses GPT‑4 in domain-specific performance at lower cost.

A: You can train experts for different languages for high-quality multilingual processing.

A: High-memory GPUs (40GB+) are optimal, but smaller setups can work with optimization and quantization.

A: But performance depends on the number of active experts and the tuning of the infrastructure.

Conclusion:

DeepSeek‑MoE is not merely a new AI model — it represents a paradigm shift in building intelligent systems. It maintains the power of large LLMs while making them:

Faster

More economical

Domain-specialized

Open-source

Scalable

Developers, firm architects, and students alike can leverage DeepSeek‑MoE to build bright, affordable, and highly specialized AI solutions. Start walking, train your own experts, deploy in real-world functions, and contribute to the open-source nation. The AI of 2026 is Efficient, specialized, and collaborative—powered by DeepSeek-MoE.