Introduction

Artificial intelligence is progressing at an extraordinary velocity. Each year, emerging large language models (LLMs) claim superior reasoning depth, expanded contextual awareness, improved alignment mechanisms, and enterprise-grade scalability. Yet only a handful of iterations genuinely transform professional workflows.

Claude 2.1 does exactly that.

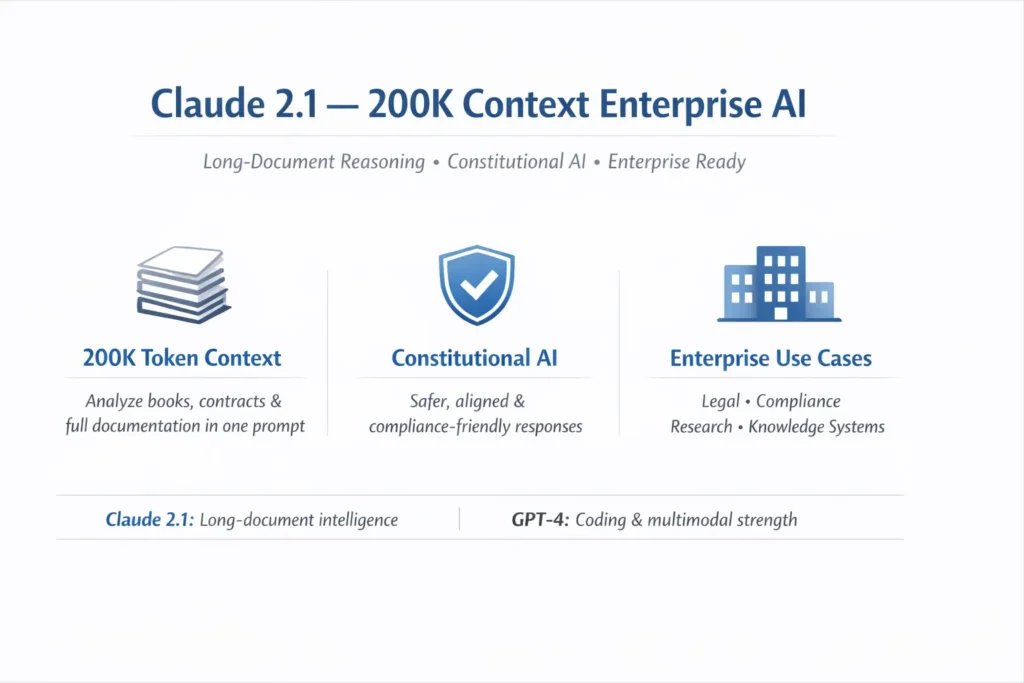

Engineered by Anthropic, Claude 2.1 introduced one of the most pragmatic advancements in modern generative AI: a 200,000-token context window. This enhancement fundamentally reshaped how enterprises, legal analysts, compliance officers, researchers, consultants, and software engineers process voluminous documentation and interconnected knowledge ecosystems.

Claude 2.1 can ingest and reason across entire books, regulatory frameworks, contracts, policy libraries, or documentation repositories in a unified inference cycle.

But critical strategic questions remain:

- Is Claude 2.1 superior to GPT-4 for enterprise intelligence?

- Does a 200K token context window materially impact operational productivity?

- Which industries gain the most tangible value?

- Is Claude 2.1 still strategically relevant in 2026?

This guide offers technical depth and enterprise-level clarity while remaining accessible and readable.

What Is Claude 2.1?

Explained in Clear, Practical Language

Claude 2.1 is an advanced transformer-based Large Language Model (LLM) developed by Anthropic as a substantial upgrade to Claude 2.

It emphasizes:

- Massive contextual processing (200,000 tokens)

- Lower hallucination incidence

- Enhanced instruction adherence

- Stronger safety alignment

- Enterprise-grade reliability and stability

While competitors frequently highlight multimodal demonstrations or creative novelty features, Claude 2.1 prioritizes document-scale reasoning precision, operational robustness, and compliance-sensitive deployment.

In Simple Terms:

Claude 2.1 is optimized for organizations managing extensive documentation, regulatory-heavy industries, institutional knowledge bases, and structured information systems.

Claude 2.1 at a Glance

| Feature | Claude 2.1 |
| --- | --- |
| Developer | Anthropic |
| Model Type | Transformer-based Large Language Model |
| Context Window | 200,000 tokens |
| Training Philosophy | Constitutional AI |
| Primary Strength | Long-document reasoning |
| API Access | Yes |
| Web Interface | Claude.ai |
| Best For | Legal, enterprise, compliance, research |

The Breakthrough Innovation: 200K Token Context Window

What Does 200,000 Tokens Actually Represent?

A token approximates three-quarters of a word in English.

Therefore, 200,000 tokens correspond to roughly:

- Roughly 150,000 words

- A full-length novel

- 300–400 pages of dense technical material

- Many corporate documentation sets in their entirety
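These conversions come from simple arithmetic. The sketch below assumes the rough heuristic of 0.75 English words per token and an assumed 375 to 500 words per page of dense technical text; real ratios vary with the tokenizer, the language, and page layout. The helper names are illustrative.

```python
def tokens_to_words(tokens: int, words_per_token: float = 0.75) -> float:
    """Approximate English word count for a token budget (heuristic, not tokenizer-exact)."""
    return tokens * words_per_token


def words_to_page_range(words: float, dense_wpp: int = 500, light_wpp: int = 375) -> tuple[int, int]:
    """Return (min_pages, max_pages), assuming 375 to 500 words per page (illustrative values).

    Denser pages hold more words, so they give the lower page count.
    """
    return round(words / dense_wpp), round(words / light_wpp)


words = tokens_to_words(200_000)
print(words)                        # 150000.0
print(words_to_page_range(words))   # (300, 400)
```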

Earlier versions of OpenAI’s GPT-4 supported much smaller context windows (8,192 or 32,768 tokens, depending on the variant). That limitation often required:

- Manual document segmentation

- Loss of cross-referential relationships

- Increased hallucination probability

- Complex retrieval engineering

Claude 2.1 meaningfully reduces these operational frictions.
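For contrast, the manual segmentation that smaller windows force can be sketched as a fixed-size chunker with overlap. This is a minimal illustration that assumes whitespace-separated words as a stand-in for tokens; a production pipeline would count tokens with the model's own tokenizer. The name `chunk_words` is hypothetical.

```python
def chunk_words(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunks of at most chunk_size words, repeating `overlap`
    words between consecutive chunks to carry a little context across boundaries."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
print(chunk_words(doc, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Any clause that straddles a chunk boundary, or that refers to a section sitting in another chunk, loses that cross-reference. This is the fragmentation a larger window helps avoid.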

Why 200K Context Matters in Practical Workflows

Contextual scale alone does not guarantee intelligence, but when deployed strategically, it enables document-level cognition rather than paragraph-level recall.

Let’s examine concrete scenarios.

Legal Sector & Contract Analytics

Law firms regularly manage:

- Multi-hundred-page agreements

- Addenda and amendments

- Regulatory compliance documents

- Cross-referenced policy manuals

With Claude 2.1, legal teams can:

- Upload entire contracts in one prompt

- Compare clauses across documents

- Detect contradictory obligations

- Extract liabilities and deadlines

- Identify compliance exposures

Because the model can keep the full document in context, semantic dependencies across sections remain intact.

This can reduce clause-level hallucination risk and improve logical consistency across documents.
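Before sending several contracts in one prompt, it is worth checking that they fit. The sketch below is a rough pre-flight check that assumes the 0.75 words-per-token heuristic and reserves an assumed 4,000 tokens for the model's answer; `estimate_tokens` and `fits_in_context` are hypothetical helpers, and an exact count would need the provider's tokenizer.

```python
import math

CONTEXT_LIMIT = 200_000  # Claude 2.1's context window, in tokens


def estimate_tokens(text: str, words_per_token: float = 0.75) -> int:
    """Rough token estimate from word count (heuristic; real tokenizers differ)."""
    return math.ceil(len(text.split()) / words_per_token)


def fits_in_context(documents: list[str], reserved_output: int = 4_000,
                    limit: int = CONTEXT_LIMIT) -> bool:
    """True if all documents plus a reservation for the response fit in the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_output <= limit


# Two long placeholder agreements of 60,000 and 45,000 words.
contracts = ["word " * 60_000, "word " * 45_000]
print(fits_in_context(contracts))  # True (140,000 estimated tokens + 4,000 reserved)
```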

Enterprise Knowledge Infrastructure

Large organizations maintain:

- HR frameworks

- Standard operating procedures (SOPs)

- Internal compliance standards

- Technical architecture documentation

- Governance manuals

Claude 2.1 enables:

- Full-document ingestion of policy systems

- Internal Q&A bots with global document awareness

- Knowledge retrieval without aggressive chunking

- Automated compliance validation

This streamlines operational workflows and reduces reliance on manual lookup.

Academic Research & Literature Synthesis

Researchers often need to:

- Compare multiple academic papers simultaneously

- Perform meta-analysis

- Identify methodological contradictions

- Draft structured literature reviews

With a 200K token capacity, Claude 2.1 can analyze multiple research papers within a unified context frame.

This enhances semantic cohesion, citation mapping, and cross-document inference.
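One practical way to support citation mapping is to label each paper so the model can refer to sources by index. The sketch below wraps each paper in numbered XML-style tags and places the question after the documents, a layout Anthropic's long-context prompting guidance generally recommends. The tag names and the `build_synthesis_prompt` helper are illustrative assumptions, not a required format.

```python
def build_synthesis_prompt(papers: dict[str, str], question: str) -> str:
    """Place each paper in a numbered <document> block, then ask the question last.

    `papers` maps a title to its full text; the indices let the model cite [1], [2], ...
    """
    blocks = []
    for i, (title, text) in enumerate(papers.items(), start=1):
        blocks.append(
            f'<document index="{i}">\n'
            f"<title>{title}</title>\n"
            f"<content>\n{text}\n</content>\n"
            f"</document>"
        )
    instructions = "Cite sources by their document index, e.g. [1]."
    return "\n\n".join(blocks) + f"\n\n{instructions}\n\n{question}"


prompt = build_synthesis_prompt(
    {"Paper A": "Method X improves recall.", "Paper B": "Method X hurts precision."},
    "Where do these papers disagree?",
)
print(prompt.splitlines()[0])  # <document index="1">
```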

Software Engineering & Codebase Understanding

Developers frequently encounter:

- Legacy repositories

- Monolithic documentation systems

- Poorly documented architecture

Claude 2.1 can:

- Summarize large documentation repositories

- Explain architectural patterns

- Generate onboarding guides

- Provide contextualized documentation rewriting

However, for advanced debugging and algorithmic synthesis, GPT-4 may still demonstrate stronger computational reasoning.
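To summarize a documentation repository in one pass, its files first have to be gathered into a single input. A minimal sketch, assuming the documentation lives in Markdown files under one directory; the `collect_docs` helper and the `### FILE:` header convention are hypothetical choices for illustration.

```python
import tempfile
from pathlib import Path


def collect_docs(root: str, pattern: str = "*.md") -> str:
    """Concatenate matching files under `root`, each preceded by its relative path,
    so the model can tell which file a passage came from."""
    root_path = Path(root)
    parts = []
    for path in sorted(root_path.rglob(pattern)):
        if path.is_file():
            rel = path.relative_to(root_path).as_posix()
            parts.append(f"### FILE: {rel}\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(parts)


# Usage: build a tiny throwaway repository and collect its docs.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "guides").mkdir()
    Path(tmp, "README.md").write_text("# Overview\n", encoding="utf-8")
    Path(tmp, "guides", "setup.md").write_text("Install steps\n", encoding="utf-8")
    combined = collect_docs(tmp)

print(combined)
```

Keeping the relative path in each header lets later questions ("which guide covers installation?") be answered with a file reference rather than a vague paraphrase.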

Important Technical Clarification

A larger context window does not inherently equal higher reasoning intelligence.

Trade-offs include:

- Increased inference latency

- Higher token-based cost

- Greater prompt-structure sensitivity

- Potential attention dilution in extremely long contexts

Yet when optimized properly, the 200K window unlocks high-fidelity document-level analysis.

Constitutional AI — The Foundational Alignment Framework

Claude 2.1 is trained using a framework called Constitutional AI, introduced by Anthropic and detailed in research published on arXiv.

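Unlike purely RLHF-driven systems, which depend entirely on human preference labels, Constitutional AI integrates:

- An explicit set of written principles (the "constitution")

- Self-critique and revision of model outputs during training

- Reinforcement learning from AI feedback (RLAIF), guided by those principles

- Policy-based response constraints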

In practice, this training is intended to help Claude 2.1:

- Evaluate its own output

- Provide transparent refusal reasoning

- Reduce unsafe or misleading completions

- Align responses with pre-defined ethical guidelines
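The supervised phase of Constitutional AI, as described in Anthropic's paper, has a model draft a response, critique it against a principle, and revise it; the revised responses then serve as fine-tuning data. The sketch below shows only the shape of that loop. `generate` is a hypothetical stand-in for any text-generation call, the principle and prompts are invented for illustration, and the real pipeline (including the later reinforcement-learning phase) is far more involved.

```python
from typing import Callable

Generate = Callable[[str], str]  # hypothetical: prompt in, model text out


def critique_and_revise(generate: Generate, user_prompt: str,
                        principles: list[str], rounds: int = 1) -> str:
    """Draft a response, then for each principle request a critique and a revision."""
    response = generate(user_prompt)
    for _ in range(rounds):
        for principle in principles:
            critique = generate(
                f"Prompt: {user_prompt}\nResponse: {response}\n"
                f"Critique this response according to the principle: {principle}"
            )
            response = generate(
                f"Prompt: {user_prompt}\nResponse: {response}\nCritique: {critique}\n"
                "Rewrite the response to address the critique."
            )
    return response


def fake_generate(prompt: str) -> str:
    # Toy stand-in so the loop runs offline; a real system would call a model here.
    if "Rewrite the response" in prompt:
        return "revised answer"
    if "Critique this response" in prompt:
        return "the draft overstates certainty"
    return "draft answer"


print(critique_and_revise(fake_generate, "Is this clause enforceable?",
                          ["Avoid overstating legal certainty."]))  # revised answer
```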

Why Constitutional AI Matters for Enterprises

Enterprise deployment requires:

- Predictable behavioral boundaries

- Audit-friendly responses

- Clear refusal logic

- Reduced misinformation risk

Claude 2.1 is generally credited with:

- Clearer refusal explanations

- Stable tone consistency

- Lower hallucination frequency in policy-sensitive contexts

For compliance-heavy sectors, this reliability can help reduce legal exposure.

Claude 2.1 Benchmarks & Performance Characteristics

While raw benchmark dominance fluctuates across evaluation cycles, Claude 2.1 performs strongly in:

- Long-form reasoning

- Reading comprehension

- Legal-style analytical tasks

- Policy interpretation

- Academic summarization

It may not lead in:

- Competitive coding challenges

- Advanced mathematical proofs

- Multimodal reasoning (vision + text)

Its competitive edge lies in contextual consistency across extended inputs.

In enterprise systems, consistency often outweighs marginal benchmark superiority.

Claude 2.1 vs GPT-4 — Enterprise Comparison

Below is a strategic comparison between Claude 2.1 and GPT-4 by OpenAI.

| Category | Claude 2.1 | GPT-4 |
| --- | --- | --- |
| Context Window | 200K tokens | Smaller (varies by deployment) |
| Long-Document Analysis | Exceptional | Strong |
| Coding Performance | Solid | Often stronger |
| Safety Refusal Clarity | High | Moderate to strong |
| Multimodal Support | Limited | Strong |
| Enterprise Stability | High | High |
| Hallucination Control | Improved | Competitive |

Where Claude 2.1 Dominates

- Contract review

- Multi-document synthesis

- Regulatory compliance workflows

- Knowledge-base integration

Where GPT-4 May Lead

- Advanced debugging

- Creative writing diversity

- Mathematical reasoning

- Vision-text multimodal tasks

There is no universal winner; the right choice depends on the use case.

Simplified Architectural Overview

Anthropic has not published a detailed architecture, but Claude 2.1 is understood to incorporate:

- Transformer-based neural architecture

- Large-scale pretraining across diverse corpora

- Constitutional AI fine-tuning

- Reinforcement learning refinement

- Long-context attention optimization

Scaling to 200K tokens would have required advances in:

- Attention efficiency

- Memory allocation mechanisms

- Computational stability

- Semantic coherence across large sequences

This was not a superficial upgrade; it reflects substantial systems engineering work.
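A back-of-envelope calculation shows why long context is hard. In standard dense self-attention, each layer computes a score for every pair of tokens, so the work grows with the square of the sequence length. Anthropic has not disclosed how Claude 2.1 handles this, so the sketch below assumes plain dense attention purely for illustration and ignores causal masking, which roughly halves the count without changing the quadratic trend.

```python
def attention_pairs(seq_len: int) -> int:
    """Query-key score entries per head per layer in dense attention (n * n)."""
    return seq_len * seq_len


def relative_cost(long_len: int, short_len: int) -> float:
    """How many times more pairwise scores the longer sequence needs."""
    return attention_pairs(long_len) / attention_pairs(short_len)


print(attention_pairs(8_192))                # 67108864
print(round(relative_cost(200_000, 8_192)))  # 596
```

Going from an 8,192-token window to 200,000 tokens multiplies the context length by about 24, but multiplies the pairwise score count by roughly 596, which is why memory layout and attention efficiency become central engineering problems.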

Real-World Use Cases of Claude 2.1

Legal & Contract Review

Law firms use Claude 2.1 to:

- Compare agreements

- Extract risk clauses

- Detect contradictory obligations

- Summarize case materials

Because full contracts remain intact in context, semantic fragmentation decreases.

Enterprise Knowledge Assistants

Organizations integrate Claude 2.1 via API to:

- Answer employee policy questions

- Interpret HR documentation

- Automate compliance validation

- Provide internal support automation

This reduces support load and speeds up decision-making.
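As a sketch of such an integration, the function below builds a request body in the shape of Anthropic's Messages API (`model`, `max_tokens`, `system`, `messages`), placing the policy documents before the employee's question. It only assembles the payload; sending it would be done with Anthropic's Python SDK (for example `anthropic.Anthropic().messages.create(**payload)`) or an HTTP client. The model identifier `"claude-2.1"`, the system prompt wording, the sample policy text, and the helper name are assumptions for illustration; check Anthropic's current documentation for which models are available.

```python
import json


def build_policy_qa_request(policies: dict[str, str], question: str,
                            model: str = "claude-2.1", max_tokens: int = 1024) -> dict:
    """Assemble a Messages-API-shaped request: documents first, question last."""
    docs = "\n\n".join(
        f'<document name="{name}">\n{text}\n</document>' for name, text in policies.items()
    )
    return {
        "model": model,  # assumed model ID; availability may have changed
        "max_tokens": max_tokens,
        "system": "Answer using only the provided policy documents. "
                  "If the answer is not in them, say so.",
        "messages": [
            {"role": "user", "content": f"{docs}\n\nQuestion: {question}"}
        ],
    }


payload = build_policy_qa_request(
    {"leave-policy": "Employees accrue 1.5 days of leave per month."},  # illustrative text
    "How much leave do I accrue each month?",
)
print(json.dumps(payload, indent=2))
```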

Academic Research & Analysis

Claude 2.1 supports:

- Literature review drafting

- Cross-paper synthesis

- Citation-aware summarization

- Methodological comparison

Keeping every paper in a single context helps the synthesis stay coherent across sources.

Financial Compliance & Risk Assessment

Financial institutions benefit from:

- Regulatory documentation review

- Audit summarization

- Risk detection

- Policy cross-checking

Alignment stability is especially critical here.

Documentation-Heavy Technical Systems

Technology companies deploy Claude 2.1 for:

- Repository summarization

- Architecture explanation

- Technical documentation rewriting

- Onboarding content generation

Pricing Overview

Claude 2.1 is accessible via:

- Claude.ai interface

- Anthropic API

- Enterprise contracts

Pricing depends on:

- Input tokens

- Output tokens

- Total volume usage

For 200K-token workflows, careful cost modeling is essential.
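Because billing is per token, a simple cost model multiplies input and output token counts by their respective rates. The sketch below takes prices per million tokens as parameters; the rates in the example are placeholders, not Anthropic's published prices, which should be taken from the current pricing page.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_mtok: float, output_price_per_mtok: float,
                  requests: int = 1) -> float:
    """Estimated spend in dollars for `requests` calls of the given size."""
    per_request = (input_tokens * input_price_per_mtok
                   + output_tokens * output_price_per_mtok) / 1_000_000
    return per_request * requests


# Placeholder rates: $10 per million input tokens, $30 per million output tokens.
cost = estimate_cost(180_000, 2_000, 10.0, 30.0, requests=100)
print(f"${cost:,.2f}")  # $186.00
```

In this example the input side accounts for $180 of the $186: at full context, resending a long prompt on every call costs far more than the answer, so trimming irrelevant material before each request matters.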

Strengths of Claude 2.1

- Massive 200K context window

- Strong document-level reasoning

- Lower hallucination rate

- Clear refusal explanations

- Enterprise-grade alignment

- Compliance-friendly design

Weaknesses

- Not the strongest coding model

- Increased latency at full context capacity

- Limited multimodal functionality

- Higher cost for extremely long prompts

- Not benchmark-dominant across all categories

Who Should Use Claude 2.1?

Ideal For:

- Law firms

- Compliance departments

- Research institutions

- Enterprise IT teams

- Documentation-intensive organizations

Less Ideal For:

- Competitive algorithm developers

- Image-generation workflows

- Advanced math-heavy research tasks

Is Claude 2.1 Worth It in 2026?

If your operational workflows depend on:

- Large document ingestion

- Compliance sensitivity

- Cross-document synthesis

- Stable enterprise deployment

Claude 2.1 remains one of the most practically valuable LLMs available.

It is not designed for spectacle.

It is designed for dependable intelligence at scale.

FAQs

Q: How large is Claude 2.1's context window?

A: Claude 2.1 supports up to 200,000 tokens, one of the largest context windows available at its release.

Q: Is Claude 2.1 better than GPT-4?

A: It depends on your use case. Claude 2.1 excels at long-document reasoning, while GPT-4 may outperform in coding and complex logic tasks.

Q: Is Claude 2.1 good for coding?

A: It handles documentation-heavy and code-explanation tasks well, but it is not industry-leading for advanced debugging challenges.

Q: Can Claude 2.1 process an entire book?

A: In many cases, yes. Its 200K token context window allows ingestion of book-length documents.

Q: Is Claude 2.1 suitable for compliance-heavy industries?

A: Yes. Its Constitutional AI framework makes it particularly well suited to compliance-heavy environments.

Conclusion

Claude 2.1 stands out for its 200K token context window, its Constitutional AI framework, and strong long-document reasoning. It competes well with GPT-4 on document-heavy work and offers enterprise-ready features, making it a strong choice for legal teams, researchers, compliance departments, and large-scale documentation deployments where efficiency and scalability matter.