Introduction

Artificial intelligence is advancing quickly, and foundation models are being revised continually to handle a wider range of computing workloads. Among open-weight models, one family gets more attention from developers than any other: Meta Platforms’ Llama series.

In 2026, one core comparison defines strategic AI adoption decisions:

Should you choose Llama 3.2 or Llama 3.1?

The two releases look similar at first glance. Both belong to the same family of generative transformer models, and both are built for use in production systems.

However, beneath that similarity lies a fundamental divergence in design philosophy.

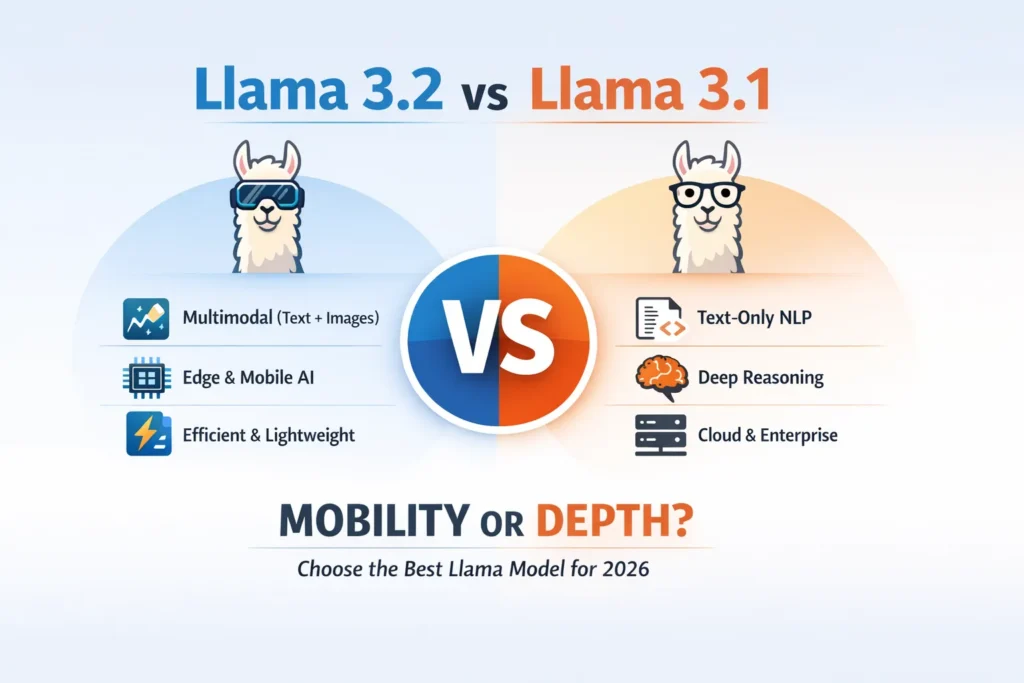

Llama 3.1 is strong at language understanding. It can make sense of large amounts of information and reason carefully about what it means, including relationships between passages that sit far apart in a long context. That makes it a good fit for large-scale systems that serve many users.

Llama 3.2 introduces multimodal intelligence (text + vision), optimized parameter efficiency, and edge-device readiness.

You’ll discover:

- Core architectural differences

- Transformer-level optimization insights

- Multimodal integration frameworks

- Reasoning vs efficiency tradeoffs

- Edge vs cloud deployment dynamics

- Benchmark interpretation

- Pros, cons & deployment matrix

What Is Llama 3.1?

Llama 3.1 is a large-scale autoregressive language model engineered primarily for advanced natural language processing workloads. It builds upon the transformer backbone with refined instruction tuning and improved stability during long-context inference.

It concentrates on:

- Text generation

- Extended context reasoning

- Code synthesis

- Structured output formatting

- Enterprise automation

- Retrieval-augmented generation (RAG) pipelines

Technically, Llama 3.1 emphasizes:

- Increased parameter density

- Improved attention calibration

- Higher logical consistency

- Reduced hallucination frequency

- Enhanced alignment fine-tuning

Key Strengths of Llama 3.1

- High-capacity parameter variants optimized for GPU clusters

- Strong benchmark positioning in reasoning datasets

- Refined instruction adherence

- Reliable structured JSON outputs

- Effective chain-of-thought modeling

Llama 3.1 is text-exclusive. That specialization allows deeper semantic modeling, syntactic robustness, and contextual coherence.
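Even with a model that is good at structured JSON output, callers usually validate the response before passing it downstream. The following is a minimal sketch of that check, not part of any Llama API: the function name `parse_model_json`, the required keys, and the sample response string are all hypothetical and chosen only for illustration.

```python
import json

def parse_model_json(raw_output, required_keys):
    """Parse a model response as a JSON object and check required keys.

    Returns the parsed dict, or None if the text is not valid JSON,
    is not a JSON object, or is missing a required key.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    if any(key not in data for key in required_keys):
        return None
    return data

# Hypothetical model response, hard-coded here instead of calling a model.
sample_output = '{"clause": "Termination", "risk": "high"}'
parsed = parse_model_json(sample_output, ["clause", "risk"])
print(parsed)
```

Returning `None` on any failure keeps the retry decision with the caller, for example re-prompting the model when the output does not parse.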

What Is Llama 3.2?

Llama 3.2 represents a strategic evolution toward multimodal and efficient AI systems.

While retaining strong textual modeling, it expands into:

- Vision-language integration (in the 11B and 90B vision variants)

- Smaller text-only parameter tiers (1B & 3B variants)

- On-device inference capability

- Optimized performance-per-watt

- Reduced memory overhead

This shift introduces multimodal embedding alignment, allowing joint representation learning across visual and textual domains.

Key Strengths of Llama 3.2

- Native image-text understanding

- Edge-optimized inference

- Lightweight parameter configurations

- Lower computational cost

- Faster response latency

Where Llama 3.1 maximizes depth, Llama 3.2 maximizes adaptability.

Llama 3.2 vs Llama 3.1 Direct Feature Comparison

| Feature | Llama 3.1 | Llama 3.2 |
| --- | --- | --- |
| Primary Objective | Deep textual reasoning | Multimodal & efficiency |
| Text Generation | ✅ Advanced | ✅ Advanced |
| Image Understanding | ❌ No | ✅ Yes |
| Small Parameter Models | Limited | ✅ 1B–3B optimized |
| Large Parameter Models | ✅ Yes | ✅ Yes |
| Edge Deployment | Moderate | High |
| Cloud Scalability | Excellent | Excellent |
| Vision Tasks | ❌ | ✅ |
| Ideal For | Complex systems | Vision + mobile AI |

Core Distinction

- Llama 3.1 optimizes textual intelligence.

- Llama 3.2 extends capability into multimodal cognition and resource-efficient deployment.

Architectural & Technical Evolution

Understanding transformer-level mechanics clarifies their divergence.

Parameter Scaling & Computational Design

Llama 3.1

- Larger parameter distributions

- Higher attention head density

- Improved token prediction calibration

- Optimized for distributed GPU infrastructure

Its expanded architecture enhances representational richness, contextual abstraction, and inferential precision.

Ideal for:

- Legal document modeling

- Scientific literature analysis

- Advanced code reasoning

- Enterprise-scale pipelines

Llama 3.2

Llama 3.2 introduces parameter efficiency optimization.

This means:

- Improved compute utilization

- Enhanced throughput

- Lower VRAM requirements

- Reduced energy consumption

The architecture balances compression with capability, ensuring that smaller models maintain practical intelligence without dramatic degradation.
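To make the VRAM point concrete, here is a back-of-the-envelope sketch of weight memory as parameter count times bits per weight. It is only an approximation: it ignores the KV cache, activations, and runtime overhead, and the parameter counts are nominal round figures (the 8B and 70B entries are Llama 3.1 sizes that this article does not list). The function and table names are illustrative.

```python
# Rough weight-memory estimate: parameters x bits per weight.
# Real usage is higher because of KV cache, activations, and overhead.

def weight_memory_gib(num_params, bits_per_weight):
    """Return approximate memory needed for model weights, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)

# Nominal parameter counts, used only for illustration.
MODELS = {
    "Llama 3.2 1B": 1e9,
    "Llama 3.2 3B": 3e9,
    "Llama 3.1 8B": 8e9,
    "Llama 3.1 70B": 70e9,
}

def footprint_table(bits_options=(16, 8, 4)):
    """Map each model name to {bits: GiB} for the given precisions."""
    table = {}
    for name, params in MODELS.items():
        table[name] = {
            bits: round(weight_memory_gib(params, bits), 2)
            for bits in bits_options
        }
    return table

if __name__ == "__main__":
    for name, row in footprint_table().items():
        print(name, row)
```

Under these assumptions, a 3B model at 4 bits per weight needs on the order of 1.4 GiB for weights alone, which is why the smaller tiers are candidates for phones and laptops.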

Multimodal Capabilities

This is the defining structural advancement.

Llama 3.1

- Text-only transformer

- No image encoder

- No cross-modal attention layers

It cannot process visual tokens.

Llama 3.2

- Integrates a vision encoder

- Uses cross-attention fusion layers

- Aligns visual embeddings with textual tokens

This enables:

- Image caption generation

- Visual question answering

- Document OCR interpretation

- Screenshot contextualization

- E-commerce tagging systems

- UI design inspection

For applications involving visual inputs, Llama 3.2 is not optional — it is foundational.
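The cross-attention fusion mentioned above can be illustrated with a generic scaled dot-product sketch in which text tokens act as queries over image-patch embeddings. This is not Meta's implementation: it omits learned projection matrices, multiple heads, and any gating, and the toy vectors are made up.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(text_queries, image_keys, image_values):
    """Let each text token attend over all image patches.

    text_queries: n_text vectors of dimension d
    image_keys:   n_img vectors of dimension d
    image_values: n_img vectors (any fixed dimension)
    Returns one fused vector per text token.
    """
    d = len(text_queries[0])
    scale = 1.0 / math.sqrt(d)
    value_dim = len(image_values[0])
    fused = []
    for q in text_queries:
        # Similarity between this text token and every image patch.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in image_keys]
        weights = softmax(scores)
        # Weighted average of the image values.
        fused.append([
            sum(w * v[j] for w, v in zip(weights, image_values))
            for j in range(value_dim)
        ])
    return fused

# Toy inputs: 2 text tokens, 3 image patches, dimension 2.
text_q = [[1.0, 0.0], [0.0, 1.0]]
img_k = [[10.0, 0.0], [0.0, 10.0], [0.0, 0.0]]
img_v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

fused = cross_attention(text_q, img_k, img_v)
print(fused)
```

In this toy setup the first text token aligns with the first image patch, so its fused vector ends up close to that patch's value vector.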

Benchmark & Performance Trends

Exact benchmark values vary depending on quantization, fine-tuning, and dataset configuration. However, macro-level tendencies reveal patterns.

Reasoning

Llama 3.1 demonstrates superior performance in:

- Multi-step logical deduction

- Code generation accuracy

- Long-form coherence

- Complex instruction adherence

- Structured data formatting

Its deeper transformer layers improve abstraction capability, symbolic reasoning approximation, and consistency across extended prompts.

Latency & Efficiency

Llama 3.2 excels in:

- Lower latency responses

- CPU-level inference

- Edge AI deployment

- Reduced power consumption

Smaller models (1B–3B) enable rapid summarization and real-time interactions on constrained hardware.

Practical Use Cases

Let’s translate theory into deployment decisions.

When to Choose Llama 3.2

Ideal for:

- Mobile AI assistants

- Vision-enabled chatbots

- Document parsing apps

- Retail image recognition systems

- AR/VR assistants

- On-device inference solutions

Example:

A startup building a shopping application that analyzes product photos would benefit significantly from Llama 3.2’s multimodal alignment.

When to Choose Llama 3.1

Best suited for:

- Enterprise legal research systems

- Financial analysis automation

- Coding copilots

- Scientific reasoning assistants

- Academic research workflows

Example:

A SaaS platform developing a compliance-aware legal AI would favor Llama 3.1.

Hardware & Deployment Considerations

Infrastructure alignment determines cost efficiency.

Cloud Deployment

Both models perform well in cloud ecosystems.

Llama 3.1 advantages:

- GPU cluster optimization

- Large context modeling

- Distributed inference stability

Llama 3.2 advantages:

- Hybrid workload compatibility

- Efficient scaling

- Lower compute footprint

Edge & On-Device AI

Here, Llama 3.2 dominates.

It provides:

- Smaller memory consumption

- Faster CPU throughput

- Quantization friendliness

- Minimal GPU reliance

For budget-restricted startups, Llama 3.2 significantly lowers infrastructure overhead.
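As an illustration of what quantization does to weights, the sketch below applies simple symmetric per-tensor int8 quantization to a handful of made-up values and measures the round-trip error. Production toolchains use more elaborate schemes (per-channel or group-wise scales, 4-bit formats), so treat this as a conceptual example with hypothetical function names.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to int8 codes."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]

weights = [0.12, -0.5, 0.33, 0.0, -0.07]  # made-up values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(codes)
print(max_error)
```

Each weight is stored in one byte instead of two or four, and the reconstruction error per weight stays within half a quantization step.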

Pros & Cons

Llama 3.1

Pros

- Superior reasoning depth

- Strong code generation

- Mature optimization

- Enterprise-grade reliability

Cons

- No vision support

- Higher hardware demands

- Limited edge flexibility

Llama 3.2

Pros

- Multimodal processing

- Edge-ready architecture

- Efficient smaller models

- Reduced deployment cost

Cons

- Slight reasoning compromise

- Increased architectural complexity

- Multimodal tuning requirements

Decision Matrix: Which Model Should You Pick?

| Scenario | Best Model | Reason |
| --- | --- | --- |
| Vision Applications | Llama 3.2 | Native multimodal support |
| Deep Research | Llama 3.1 | Advanced reasoning |
| Coding Assistant | Llama 3.1 | Logical precision |
| Mobile AI App | Llama 3.2 | Lightweight deployment |
| Startup MVP | Llama 3.2 | Cost efficiency |
| Enterprise | Llama 3.1 | Stability & scale |
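The matrix above can be encoded as a small lookup for use in tooling or documentation. This is just one possible encoding; the `recommend` helper and the lowercase scenario keys are hypothetical, while the recommendations and reasons are copied from the table.

```python
# Scenario -> (recommended model, reason), taken from the decision matrix.
DECISION_MATRIX = {
    "vision applications": ("Llama 3.2", "Native multimodal support"),
    "deep research": ("Llama 3.1", "Advanced reasoning"),
    "coding assistant": ("Llama 3.1", "Logical precision"),
    "mobile ai app": ("Llama 3.2", "Lightweight deployment"),
    "startup mvp": ("Llama 3.2", "Cost efficiency"),
    "enterprise": ("Llama 3.1", "Stability & scale"),
}

def recommend(scenario):
    """Return (model, reason) for a scenario named in the matrix."""
    key = scenario.strip().lower()
    if key not in DECISION_MATRIX:
        raise KeyError(f"No recommendation for scenario: {scenario!r}")
    return DECISION_MATRIX[key]

model, reason = recommend("Coding Assistant")
print(f"{model}: {reason}")
```

Scenarios outside the table raise a `KeyError` rather than returning a guess, since the matrix only covers the six cases listed.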

Future Outlook of the Llama Ecosystem

The trajectory of Meta AI suggests:

- Expanded multimodal integration

- Larger context windows

- Higher efficiency per parameter

- Improved fine-tuning tooling

Multimodal systems represent the next evolutionary stage in generative AI. Llama 3.2 embodies this transition.

Yet, high-capacity reasoning systems, such as Llama 3.1, remain indispensable for enterprise-grade deployments.

The future is likely to converge toward hybrid architectures that merge deep reasoning with multimodal perception.

Strategic Analysis: Depth vs Adaptability

Zooming out reveals two complementary strategies:

Llama 3.1: Depth & Precision

Focused on linguistic mastery, inferential rigor, and high-capacity modeling.

Llama 3.2: Flexibility & Multimodality

Focused on adaptability, efficiency, and real-world application breadth.

Both strategies serve distinct operational needs.

FAQs

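Q: Is Llama 3.2 better than Llama 3.1?

A: Not universally. Llama 3.2 is better for multimodal and edge applications, while Llama 3.1 is stronger for deep text reasoning.

Q: Can Llama 3.1 process images?

A: No. Llama 3.1 is text-only.

Q: Which model should I choose?

A: If you are building mobile or vision apps, choose Llama 3.2. If you are building coding assistants, choose Llama 3.1.

Q: Are the smaller Llama 3.2 models efficient?

A: Yes. The smaller variants are optimized for performance-per-compute.

Q: Which model is more future-proof?

A: Multimodal AI gives Llama 3.2 broader flexibility, but both models remain relevant.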

Conclusion

Choosing between Llama 3.1 and Llama 3.2 is not about selecting a universally superior model; it is about aligning technical capability with strategic intent.

If your organization prioritizes:

- Deep analytical reasoning

- Long-context document comprehension

- High-precision coding assistance

- Enterprise-scale workflows

- Complex multi-step logic

Then Llama 3.1 remains the stronger candidate. Its larger parameter configurations and reasoning-optimized transformer stack make it ideal for cloud-based, compute-rich environments.