Introduction

When the average user hears the name Claude AI, their mind usually jumps to Claude 3, Claude Opus, or Anthropic’s most recent high-performance reasoning systems. These modern versions are fast, deeply capable, multimodal, and optimized for advanced workflows. However, very few discussions explore where Claude truly began: Claude 1.

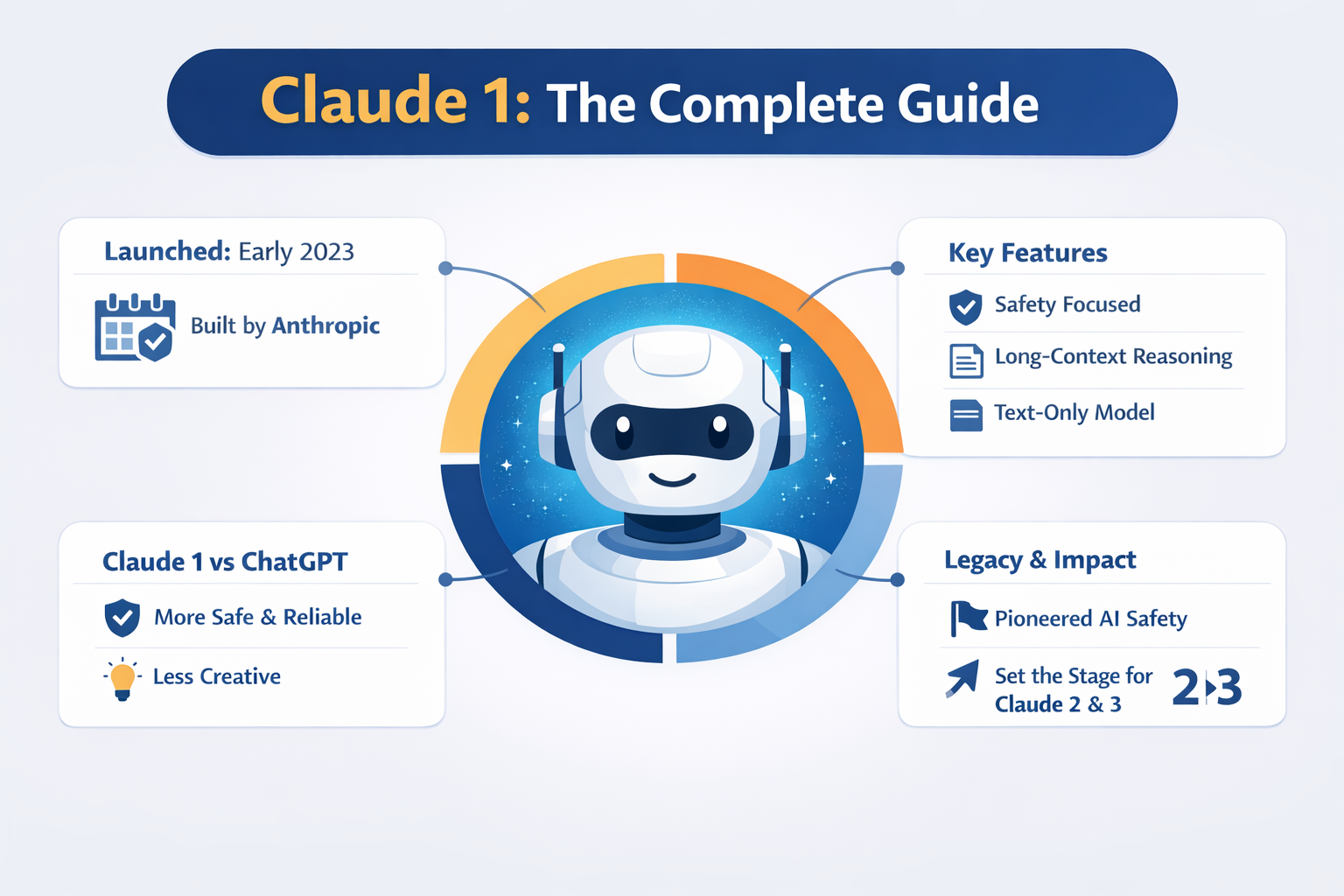

Claude 1 was not just Anthropic’s first publicly usable large language model — it was a philosophical experiment, a technical foundation, and a safety-first counterbalance to how AI development was unfolding at the time.

While many early AI systems raced toward scale, creativity, and viral adoption, Claude 1 was intentionally built with restraint. Anthropic introduced a fundamentally new approach to alignment known as Constitutional AI, where models learn to follow structured ethical principles instead of relying exclusively on large volumes of human feedback.

Although it is no longer publicly accessible, its design principles, alignment strategies, and safety mechanisms remain embedded in modern systems and continue to influence the broader AI research ecosystem.

This article covers:

- What Claude 1 actually was

- Why Anthropic created it

- How Claude 1 functioned at a technical level

- Its core strengths and structural weaknesses

- Claude 1 vs ChatGPT and other competing models

- Practical, real-world use cases

- Why Claude 1 was eventually replaced

- The long-term legacy left behind

What Is Claude 1?

Claude 1 was the first-generation large language model (LLM) developed by Anthropic, an AI research and deployment company headquartered in San Francisco. It was designed to be:

- Helpful

- Honest

- Harmless

Key Identity of Claude 1

| Attribute | Details |
| --- | --- |
| Developer | Anthropic |
| Category | Large Language Model (LLM) |
| Launch Period | Early 2023 |
| Training Method | Constitutional AI |
| Primary Focus | Safety, alignment, reasoning |
| Multimodal Support | ❌ No |
| Current Status | Deprecated |

Why Anthropic Built Claude 1

Anthropic was founded by former OpenAI researchers who became increasingly concerned about the trajectory of large-scale AI systems. Their concerns included:

- Rapid increases in model capability without proportional safety controls

- Over-reliance on opaque reinforcement learning techniques

- Lack of transparency in refusal behavior and ethical boundaries

- Difficulty scaling human feedback safely

Claude 1 vs Its Contemporaries

- GPT-3 / GPT-3.5

- Early Google Bard

- Meta LLaMA

| Focus Area | Claude 1 | GPT-3 |
| --- | --- | --- |
| Safety Alignment | Very High | Moderate |
| Creativity | Medium | High |
| Hallucination Rate | Lower | Higher |
| Long Context | Strong | Limited |

Technical Overview

Model Architecture

Claude 1 was built using a transformer-based neural architecture, similar in structure to other large language models of its era.

What differentiated Claude 1 was not the architecture itself, but the training methodology layered on top of it.

Rather than relying predominantly on reinforcement learning from human feedback (RLHF), Claude 1 integrated Constitutional AI at the core of its learning process.
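The core idea of Constitutional AI, a model critiquing and revising its own drafts against written principles, can be sketched in a few lines. This is a toy illustration only, not Anthropic's implementation: the principles, the function names, and the stub "model" functions below are all hypothetical. In real training, a language model plays each role and the revised outputs are then used as training data.

```python
from typing import Callable, List, Optional

# Hypothetical principles for illustration; NOT Anthropic's actual constitution.
PRINCIPLES: List[str] = [
    "Avoid giving dangerous instructions.",
    "Be honest about uncertainty.",
]


def critique_and_revise(
    prompt: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    revise: Callable[[str, str], str],
    principles: List[str],
) -> str:
    """Draft a response, then check it against each principle in turn.

    Whenever the critique step returns feedback, the draft is revised
    before moving on to the next principle.
    """
    draft = generate(prompt)
    for principle in principles:
        feedback = critique(draft, principle)
        if feedback is not None:
            draft = revise(draft, feedback)
    return draft


# --- Stub "model" functions (assumptions standing in for a real LLM) ---

def toy_generate(prompt: str) -> str:
    # Produces a deliberately overconfident draft.
    return f"The answer to '{prompt}' is definitely 42."


def toy_critique(draft: str, principle: str) -> Optional[str]:
    # Flags overconfident wording only under the uncertainty principle.
    if "uncertainty" in principle and "definitely" in draft:
        return "overconfident"
    return None


def toy_revise(draft: str, feedback: str) -> str:
    # Softens the wording when the critique flagged overconfidence.
    if feedback == "overconfident":
        return draft.replace("definitely", "probably")
    return draft


final = critique_and_revise(
    "q", toy_generate, toy_critique, toy_revise, PRINCIPLES
)
print(final)
```

The point of the sketch is the control flow: the feedback comes from checking the draft against explicit principles rather than from a human rater labelling each response.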

Core Technical Capabilities

| Feature | Claude 1 |
| --- | --- |
| Architecture | Transformer-based LLM |
| Context Window | Larger than GPT-3 (for its time) |
| Multimodal Support | ❌ No |
| Training Focus | Alignment & safety |
| Output Style | Calm, structured, neutral |

Claude 1 Strengths: What It Did Best

Strong Text Reasoning

- Long-form responses

- Clear step-by-step logic

- Policy-sensitive discussions

- Ethical reasoning tasks

High Safety & Ethical Standards

- Fewer unsafe outputs

- Less speculative or risky advice

- More consistent ethical boundaries

- Legal organizations

- Financial institutions

- Healthcare documentation

- Compliance-heavy environments

Long Context Understanding

- Document-level summarization

- Policy analysis

- Research synthesis

- Knowledge base querying

Calm, Professional Tone

- Neutral language

- Professional phrasing

- Low emotional variance

- Clear structure

Claude 1 Weaknesses: Where It Fell Short

No Multimodal Capabilities

- No image understanding

- No audio input/output

- No video processing

Limited Ecosystem

Claude 1 lacked:

- A plugin marketplace

- Broad third-party developer tooling

- Extensive API customization

- Consumer-facing integrations

Over-Cautious Behavior

- Refusing benign requests

- Providing overly generalized answers

- Avoiding creative or speculative discussion

Hallucinations Still Existed

- Factual inaccuracies

- Outdated information

- Subtle technical errors

Limited Creativity

- Too restrained

- Less imaginative

- Overly formal

Claude 1 vs ChatGPT (GPT-3.5)

| Feature | Claude 1 | ChatGPT (GPT-3.5) |
| --- | --- | --- |
| Safety Alignment | ⭐⭐⭐⭐☆ | ⭐⭐⭐ |
| Creativity | ⭐⭐⭐ | ⭐⭐⭐⭐ |
| Context Handling | ⭐⭐⭐⭐ | ⭐⭐⭐ |
| Plugins | ❌ | ✔ |
| Multimodal | ❌ | ❌ |

Claude 1 vs Claude 2

| Area | Claude 1 | Claude 2 |
| --- | --- | --- |
| Context Window | Large | Much Larger |
| Accuracy | Good | Better |
| Reasoning | Strong | Stronger |
| Availability | Limited | Wider |

Claude 1 vs Google Gemini

| Feature | Claude 1 | Gemini |
| --- | --- | --- |
| Safety | High | High |
| Multimodal | ❌ | ✔ |
| Ecosystem | Limited | Google-based |
| Enterprise | Moderate | Strong |

Real-World Use Cases of Claude 1

Content Writing & SEO

- Blog drafting

- Article outlines

- Content summarization

Legal & Policy Drafting

- Contract summaries

- Policy analysis

- Compliance documentation

Research Summarization

- Academic paper summaries

- Report condensation

- Insight extraction

Business Automation

- Customer service scripts

- Internal documentation

- Knowledge base maintenance

Lessons from Claude’s Evolution

- Safety must scale with intelligence

- Over-alignment reduces usability

- Long-context is a major competitive advantage

- Enterprises prioritize reliability over novelty

These lessons carried forward into:

- Claude 2

- Claude 3

- Claude Opus

Pros & Cons

Pros

- Strong safety alignment

- Excellent long-form reasoning

- Calm, professional tone

- Early leader in long-context AI

Cons

- No multimodal support

- Limited ecosystem

- Over-cautious behavior

- No longer available

FAQs

A: Claude 1 has been fully replaced by newer Claude models.

A: For safety and long-term reasoning, yes. For creativity and tooling, no.

A: To improve accuracy, context size, usability, and competitiveness.

A: It could assist, but modern models perform far better.

A: Its Constitutional AI training and safety-first design philosophy.

Conclusion:

Claude 1 was not designed to dominate headlines.

It was designed to prove a principle.

- AI can explain refusals transparently

- Long-context reasoning is transformative

- Safety and usefulness must coexist

While it lacked multimodal features and a massive ecosystem, it has permanently shaped how responsible AI development is discussed today. Understanding Claude 1 helps explain why modern Claude models behave the way they do, and where AI safety is headed next.