Introduction

The field of artificial intelligence is moving fast. By 2026, nearly every new large language model promises stronger reasoning, faster responses, and deeper understanding. One framework attracting a lot of attention right now is Grok, designed and built by xAI, the company founded by Elon Musk. People are examining it closely to see what it can do, and how it evolves will be worth watching.

Which model should I employ, Grok-2 Mini or Grok-3?

Selecting an inappropriate model can result in unnecessary expenditure, reduced computational throughput, and suboptimal system performance. Conversely, selecting the right model can enhance productivity, reduce operational costs, and elevate the end-user experience.

This in-depth, 3,500+ word guide covers:

- Architectural and computational divergences

- Contextual window size and hierarchical reasoning depth

- Coding, mathematical reasoning, and analytical benchmarks

- Cost-performance ratio and pricing tiers

- Real-world deployment scenarios

- Advantages and limitations

- Final recommendations tailored to workload requirements

By the conclusion, readers will possess a nuanced understanding of which Grok variant aligns with their 2026 AI deployment objectives.

What Is Grok?

Grok constitutes a lineage of transformer-based large language models meticulously engineered by xAI. It is explicitly designed to rival cutting-edge generative AI models while maintaining deep integration with the X ecosystem (formerly known as Twitter).

The chronological evolution of Grok is as follows:

- Grok-1 – Initial foundational architecture

- Grok-2 – Enhanced reasoning modules and improved contextual understanding

- Grok-2 Mini – Streamlined, efficiency-oriented variant

- Grok-3 – Flagship release with extensive reasoning capacity, ultra-large context, and enhanced multi-modal alignment

The distinction between Grok-2 Mini and Grok-3 is profound: it spans architecture, inference strategy, and practical application.

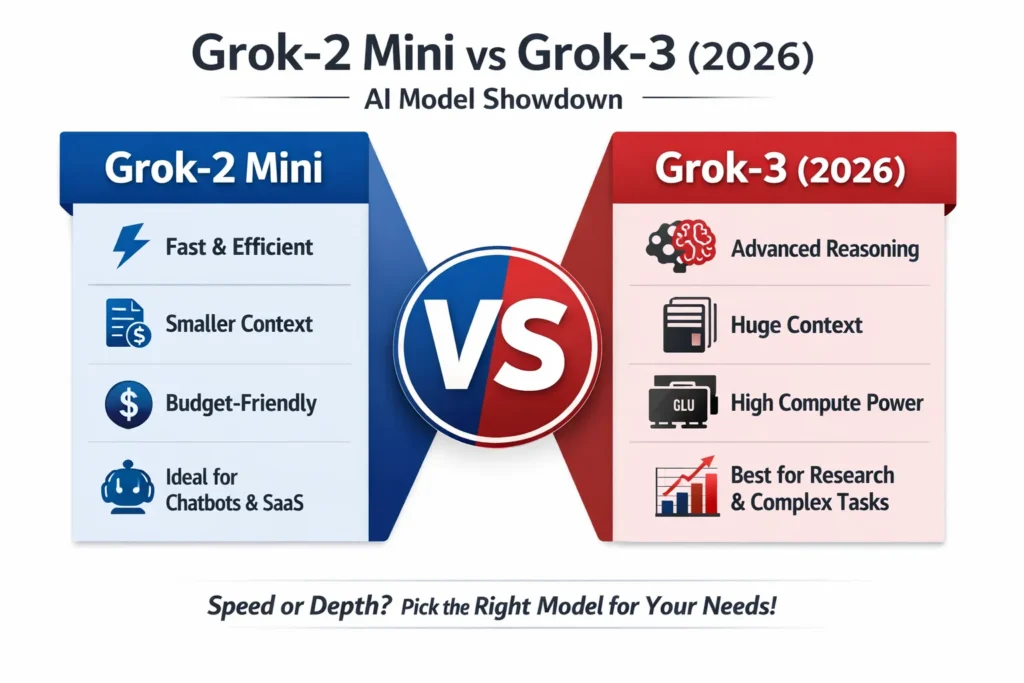

Grok-2 Mini vs Grok-3: Feature Synopsis

For readers seeking rapid clarity, the table below summarizes the primary attributes:

| Feature | Grok-2 Mini | Grok-3 |
|---|---|---|
| Model Tier | Lightweight / Efficiency-Optimized | Flagship / Frontier |
| Compute Scale | Streamlined, reduced compute | Extensive multi-GPU orchestration |
| Contextual Window | Large (sufficient for most tasks) | Ultra-large (~1M tokens reported) |
| Reasoning Depth | Moderate to High | Advanced multi-step and hierarchical reasoning |
| Inference Speed | Very Fast | Slightly Slower |
| Coding Accuracy | Strong | Excellent |
| Optimal Use Cases | Chatbots, SaaS, cost-efficient AI | Research, in-depth analysis, multi-module coding |
| Pricing Tier | Budget-friendly | Premium-tier |

Quick Takeaway:

- Speed and affordability → Grok-2 Mini

- Maximum reasoning capacity → Grok-3

Architectural Divergences Explained

Computational Power & Model Scale

The preeminent difference between Grok-2 Mini and Grok-3 is the scale of underlying computational resources.

Grok-3 Compute Paradigm:

- Trained on extensive GPU clusters

- Enhanced reasoning fidelity and inference accuracy

- Superior long-form text generation capabilities

- Consistent, reliable code synthesis

- Augmented mathematical and logical problem-solving ability

- Expanded parameter count and larger training data exposure

Grok-2 Mini Compute Paradigm:

- Tailored for cost-efficient inference

- Rapid token generation for high-throughput applications

- Optimized for API scalability

- Sufficient performance without requiring high-end hardware

Simplified Analogy:

- Grok-3 = Cognitive depth and analytical sophistication

- Grok-2 Mini = Operational efficiency and rapid throughput

Contextual Window & Token Capacities

The contextual window determines how much sequential information the model can ingest and process in one pass.

Grok-3 Contextual Strength:

- Capable of handling extremely extensive text spans (approaching 1 million tokens)

- Optimal for:

  - Legal or regulatory document analysis

  - Multi-chapter research papers

  - Complete software codebases

  - Financial projections or modeling

  - Enterprise knowledge aggregation

Grok-2 Mini Contextual Capacity:

- Sufficient for most workflows:

  - Content creation

  - Chatbot interactions

  - Business question-answering systems

  - SaaS-oriented AI features

Why Contextual Capacity Matters:

- Processing a 300-page document → Grok-3 is superior

- Handling chat logs, summaries, or concise Q&A → Grok-2 Mini suffices
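To make the 300-page example concrete, here is a rough back-of-the-envelope check in Python. It assumes about 500 words per page and about 1.3 tokens per word, both generic rules of thumb rather than properties of Grok's tokenizer. Because this guide gives no exact window for Grok-2 Mini, the sketch uses a hypothetical 128,000-token placeholder for it; the Grok-3 figure follows the ~1M tokens reported above. `estimate_tokens` and `models_that_fit` are illustrative names only.

```python
import math

# Rules of thumb (assumptions), not measured values for Grok's tokenizer.
WORDS_PER_PAGE = 500     # hypothetical: dense, single-spaced text
TOKENS_PER_WORD = 1.3    # hypothetical: common heuristic for English prose

# Context limits used for the comparison.
CONTEXT_LIMITS = {
    "grok-2-mini": 128_000,   # placeholder assumption, not a published spec
    "grok-3": 1_000_000,      # approximate, as reported
}


def estimate_tokens(pages: int) -> int:
    """Estimate how many tokens a document of `pages` pages occupies."""
    return math.ceil(pages * WORDS_PER_PAGE * TOKENS_PER_WORD)


def models_that_fit(pages: int, reserve_for_output: int = 4_000) -> list[str]:
    """Models whose window holds the whole document plus a reserved
    token budget for the model's answer."""
    needed = estimate_tokens(pages) + reserve_for_output
    return [name for name, limit in CONTEXT_LIMITS.items() if needed <= limit]


if __name__ == "__main__":
    for pages in (10, 300):
        print(pages, "pages ->", estimate_tokens(pages), "tokens; fits:", models_that_fit(pages))
```

Under these assumptions a 300-page document needs roughly 195,000 tokens, which exceeds the placeholder Mini window but sits comfortably inside Grok-3's reported range; swap in real limits and your own page density before relying on the result.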

Reasoning & Multi-Step Analytical Modes

Grok-3 integrates advanced reasoning pipelines enabling:

- Multi-step arithmetic and algebra

- Complex logical chains

- Scientific simulation support

- Debugging multi-component systems

- Algorithmic design and analysis

Grok-2 Mini, while capable, offers:

- Moderate reasoning proficiency

- Solid performance on everyday tasks without a noticeable quality drop

Summary:

- Simple business logic, chatbots, and automation → Grok-2 Mini adequate

- Advanced computational research, scientific modeling, or systemic debugging → Grok-3 preferable

Performance Benchmarks: Coding, Math & Analysis

Published benchmark scores vary with test suites and model versions, but practical use reveals consistent trends.

Coding Performance Comparison

Grok-3 Coding Advantages:

- Handles multi-file and multi-module projects efficiently

- Maintains logical consistency across extensive codebases

- Superior debugging and refactoring support

- Architectural planning and design guidance

Grok-2 Mini Coding Advantages:

- Rapid snippet generation

- Efficient for small scripts or automation tasks

- Optimized for SaaS APIs

- Lower computational cost per inference

Illustrative Example:

- Building a full-stack SaaS → Grok-3 preferred

- Creating a chatbot, generating Python code snippets → Grok-2 Mini is sufficient

Mathematical & Logical Reasoning

Grok-3:

- Excels in algebraic problem solving

- Multi-step equations and logical proofs

- Structured reasoning tasks

- Competitive coding environments

Grok-2 Mini:

- Handles arithmetic and business calculations

- Spreadsheet-like analyses

- Standard logic puzzles

- Efficient for routine tasks

Takeaway: For high-stakes math or algorithmic research → Grok-3 is clearly superior

Speed & Latency

Real-time processing and latency-sensitive applications necessitate speed:

Grok-2 Mini:

- Rapid token generation

- Lower latency

- Ideal for high-frequency API calls

Grok-3:

- Slightly slower due to model scale

- Prioritizes reasoning and accuracy over raw throughput

Practical Tip: Systems serving tens of thousands of real-time requests → Grok-2 Mini offers higher cost-efficiency
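Why does latency matter so much at high request volumes? One standard way to reason about it is Little's law: average requests in flight equals arrival rate times time spent in the system. The sketch below applies that law; the per-request latencies are hypothetical placeholders, not measured Grok numbers, and the peak-to-average factor is an assumption about traffic shape.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_in_flight(requests_per_day: float, latency_s: float) -> float:
    """Little's law: average concurrency = arrival rate * latency."""
    arrival_rate = requests_per_day / SECONDS_PER_DAY  # requests per second
    return arrival_rate * latency_s


def peak_in_flight(requests_per_day: float, latency_s: float, peak_factor: float) -> float:
    """Scale the average by an assumed peak-to-average traffic ratio."""
    return average_in_flight(requests_per_day, latency_s) * peak_factor


if __name__ == "__main__":
    # Hypothetical end-to-end latencies per request, in seconds.
    latencies = {"Grok-2 Mini": 1.5, "Grok-3": 4.0}
    for model, latency in latencies.items():
        peak = peak_in_flight(100_000, latency, peak_factor=5.0)
        print(f"{model}: ~{peak:.1f} concurrent requests at peak")
```

Because concurrency grows linearly with latency, a faster model needs proportionally fewer simultaneous connections and worker slots to serve the same daily volume.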

Real-World Use Cases

When to Choose Grok-2 Mini

Cost-Efficient Deployment:

Startups benefit from reduced GPU usage and lower operational expenditure

High-Speed Chat Interfaces:

Customer support bots, live chat, and social media AI agents

SaaS-Oriented Features:

Summarization, rewriting, email drafting, and business Q&A

High-Volume Environments:

Suited to workloads of 100,000+ API calls per day

When Grok-3 Is Advantageous

Scientific & Academic Research:

Requires multi-step reasoning and ultra-long context

Complex Coding Projects:

Multi-module architecture, system design, backend logic debugging

Long-Document Analysis:

Legal contracts, policy manuals, compliance reports

Strategic Decision-Support Systems:

Financial forecasting, risk modeling, corporate analytics

Verdict: For high-complexity tasks, the additional computational cost is justified

Pricing & Accessibility Analysis

| Tier | Grok-2 Mini | Grok-3 |
|---|---|---|
| Entry-Level Access | Broad availability | Limited |
| Premium Subscription | Optional | Typically required |
| Inference/Compute Cost | Low | High |
| Optimal Value | SMEs & startups | Enterprises & research divisions |

Cost-Performance Ratio:

- Everyday automation → Grok-2 Mini offers superior ROI

- Advanced research, multi-step reasoning, and large-scale coding → Grok-3 offers higher utility
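The cost-performance ratio comes down to simple arithmetic once you know per-token prices. The prices in this sketch are round-number placeholders, not xAI's actual rates, so substitute current published figures before drawing conclusions. It also assumes every call uses the same number of input and output tokens; `Pricing` and `daily_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Prices in USD per one million tokens (placeholder values below)."""
    input_per_million: float
    output_per_million: float


def daily_cost(p: Pricing, calls_per_day: int, input_tokens: int, output_tokens: int) -> float:
    """Daily spend, assuming every call uses the same token counts."""
    total_input = calls_per_day * input_tokens
    total_output = calls_per_day * output_tokens
    return (total_input * p.input_per_million + total_output * p.output_per_million) / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices, NOT published xAI rates.
    plans = {
        "Grok-2 Mini": Pricing(input_per_million=1.0, output_per_million=2.0),
        "Grok-3": Pricing(input_per_million=5.0, output_per_million=15.0),
    }
    for model, pricing in plans.items():
        cost = daily_cost(pricing, calls_per_day=100_000, input_tokens=500, output_tokens=200)
        print(f"{model}: ${cost:,.2f}/day, ${cost * 30:,.2f}/30 days")
```

Since cost scales linearly with call volume, the gap between tiers widens in absolute terms as traffic grows, which is why high-volume automation tends to favor the cheaper model.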

Pros & Cons

Grok-2 Mini:

Pros:

- High inference speed

- Cost-efficient

- Scales well for chat & SaaS

- Adequate reasoning for standard workflows

Cons:

- Moderate reasoning depth

- Smaller context window

- Slightly weaker in complex coding scenarios

Grok-3:

Pros:

- Exceptional reasoning capacity

- Ultra-large context window

- Advanced debugging and code architecture

- Ideal for enterprise and research analysis

Cons:

- Premium cost

- Slight latency overhead

- Overkill for basic tasks

Head-to-Head Scores (out of 10)

| Category | Grok-2 Mini | Grok-3 |
|---|---|---|
| Speed | 9 | 7 |
| Reasoning | 7.5 | 9.5 |
| Coding | 8 | 9 |
| Cost-Efficiency | 9 | 6.5 |
| Long Context | 7 | 10 |

Conclusion: There is no universal champion. Choice depends entirely on task complexity and operational needs.
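Because the right choice depends on which categories matter most, one way to apply the scores above is a weighted average. In this sketch only the scores come from the table; the workload profiles and their weights are hypothetical examples, and `weighted_score` and `rank_models` are illustrative names.

```python
# Category scores copied from the head-to-head table (0 to 10 scale).
SCORES = {
    "Grok-2 Mini": {"speed": 9, "reasoning": 7.5, "coding": 8, "cost_efficiency": 9, "long_context": 7},
    "Grok-3": {"speed": 7, "reasoning": 9.5, "coding": 9, "cost_efficiency": 6.5, "long_context": 10},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of category scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(scores[category] * w for category, w in weights.items()) / total_weight


def rank_models(weights: dict[str, float]) -> list[str]:
    """Model names ordered from best to worst fit for the given weights."""
    return sorted(SCORES, key=lambda m: weighted_score(SCORES[m], weights), reverse=True)


if __name__ == "__main__":
    # Hypothetical workload profiles: a higher weight means more important.
    profiles = {
        "support chatbot": {"speed": 3, "cost_efficiency": 3, "reasoning": 1, "coding": 1},
        "research analysis": {"reasoning": 3, "long_context": 3, "coding": 1, "speed": 1},
    }
    for name, weights in profiles.items():
        print(name, "->", rank_models(weights))
```

With equal weights across all five categories, Grok-3 edges ahead only slightly (8.4 vs. 8.1), so a modest shift toward speed or cost is enough to flip the ranking.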

Developer Decision Framework

- Budget Assessment → Limited → Grok-2 Mini, Enterprise → Grok-3

- Contextual Needs → Extensive documents → Grok-3, Conversational AI → Grok-2 Mini

- Task Complexity → Deep multi-step reasoning → Grok-3, Routine automation → Grok-2 Mini

- Real-Time Speed Requirement → High throughput → Grok-2 Mini, Depth priority → Grok-3
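The four criteria can be encoded as a small helper. The framework does not say how to resolve conflicts between criteria, so this sketch makes two assumptions: long-document needs act as a hard requirement (a document that does not fit in the context window cannot be processed at all), and the remaining three criteria decide by simple majority. `Workload` and `choose_model` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    limited_budget: bool      # Budget Assessment: limited vs. enterprise
    long_documents: bool      # Contextual Needs: extensive documents?
    deep_reasoning: bool      # Task Complexity: deep multi-step reasoning?
    latency_sensitive: bool   # Real-Time Speed: high-throughput requirement?


def choose_model(w: Workload) -> str:
    # Assumption: long inputs are a hard constraint, so they decide alone.
    if w.long_documents:
        return "Grok-3"
    # Each remaining criterion casts one vote; True means "favors Grok-3".
    grok3_votes = sum([
        not w.limited_budget,     # enterprise budget
        w.deep_reasoning,         # complex multi-step tasks
        not w.latency_sensitive,  # depth prioritized over speed
    ])
    # Three voters, so a majority is at least two votes.
    return "Grok-3" if grok3_votes >= 2 else "Grok-2 Mini"


if __name__ == "__main__":
    support_bot = Workload(limited_budget=True, long_documents=False,
                           deep_reasoning=False, latency_sensitive=True)
    contract_review = Workload(limited_budget=False, long_documents=True,
                               deep_reasoning=True, latency_sensitive=False)
    print(choose_model(support_bot), choose_model(contract_review))
```

A different team might weight budget as a hard limit instead of a vote; the point of writing it down is that the precedence rules become explicit and easy to change.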

Grok-2 Mini vs Grok-3 for Different Users

Startups:

- Deploy Grok-2 Mini initially; scale to Grok-3 if complexity rises

Enterprises:

- Strategically combine:

  - Grok-2 Mini for customer-facing services

  - Grok-3 for backend analysis

Developers:

- Heavy coding and algorithmic tasks → Grok-3

- Script generation and automation → Grok-2 Mini

Researchers:

- Multi-step reasoning, document analysis → Grok-3

FAQs

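Q: What is the main difference between Grok-2 Mini and Grok-3?

A: Grok-3 is the flagship, while Mini versions prioritize efficiency and lower compute usage.

Q: Which model is better for coding?

A: Complex backend systems → Grok-3; everyday scripts → Grok-2 Mini.

Q: Which model is cheaper to run?

A: Grok-2 Mini has lower inference and operational compute costs.

Q: Is Grok-3 always the better choice?

A: Not necessarily. For basic or real-time tasks, Grok-2 Mini may match or exceed it in efficiency.

Q: Are both models suitable for enterprise use?

A: Both models are enterprise-ready, but Grok-3 excels in research-heavy or high-complexity workflows.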

Conclusion

Selecting between Grok-2 Mini and Grok-3 ultimately hinges on your workload, budget, and performance priorities. Both models are exceptional LLMs within the xAI ecosystem, but they cater to different operational paradigms: efficiency versus depth.

Grok-2 Mini shines when speed, cost-effectiveness, and high-throughput deployment matter. It is ideal for startups, SaaS applications, chatbots, and scenarios requiring frequent API calls. Its lighter reasoning footprint and smaller context window make it well suited to tasks that do not demand multi-step, hierarchical problem-solving.

Grok-3, on the other hand, is engineered for cognitive depth, advanced reasoning, and ultra-long context comprehension. Researchers, enterprises, and developers working on multi-module coding, complex algorithmic tasks, or large-document analysis will benefit from its flagship capabilities. Though premium-priced and slightly slower in token generation, it delivers greater accuracy and analytical sophistication.