Introduction

Artificial intelligence is moving forward faster than ever. Year after year, new AI systems appear, each more powerful, swift, and complex. One of today’s most talked-about model families is Grok, built by xAI and integrated into the X platform. People who look ahead in different fields are starting to ask the same question:

Which model should you adopt — Grok‑3 Mini or Grok‑3.5?

You might be a developer building products that must handle a lot of users, a startup founder who needs AI tools that do not cost too much, a researcher who has to analyze a lot of data, an enterprise decision maker who has to secure a good return on investment and reliable operation, or simply someone who follows artificial intelligence and wants to know what is going on. Whichever you are, your choice of model matters. It influences:

- Performance and fluency

- Response speed

- Cost and compute efficiency

- Scalability and long‑term value

In this reference guide, we provide a clear, jargon‑light breakdown of Grok‑3 Mini vs Grok‑3.5. You’ll walk away with practical insight into:

- What Grok‑3 Mini actually is

- How Grok‑3.5 advances the technology

- Side‑by‑side feature and capability comparisons

- Empirical benchmarking observations

- Expected pricing dynamics in 2026

- Real‑world deployment patterns

- Advantages and limitations of each

- FAQs and the final expert recommendation

Let’s embark on this comparative exploration.

The Grok Ecosystem: Foundational Context You Must Understand

Before diving into the specifics of Grok‑3 Mini vs Grok‑3.5, you need context. Grok is not just another AI model; it’s a purpose‑built conversational intelligence ecosystem developed by xAI, deeply integrated with the social and information threads of the X platform.

Grok’s design mantra includes:

- Delivering contextually relevant responses in real time

- Augmenting knowledge with live, ever‑fresh web data

- Exhibiting advanced reasoning and analytic depth

Grok Model Evolution

Here is the lineage of Grok development:

- Grok‑1 – The original version establishing real‑time interaction

- Grok‑2 – Performance and stability refinement

- Grok‑3 – Substantive expansion of reasoning and context

- Grok‑3 Mini – Streamlined, efficient variation

- Grok‑3.5 – Intelligence‑centric evolution

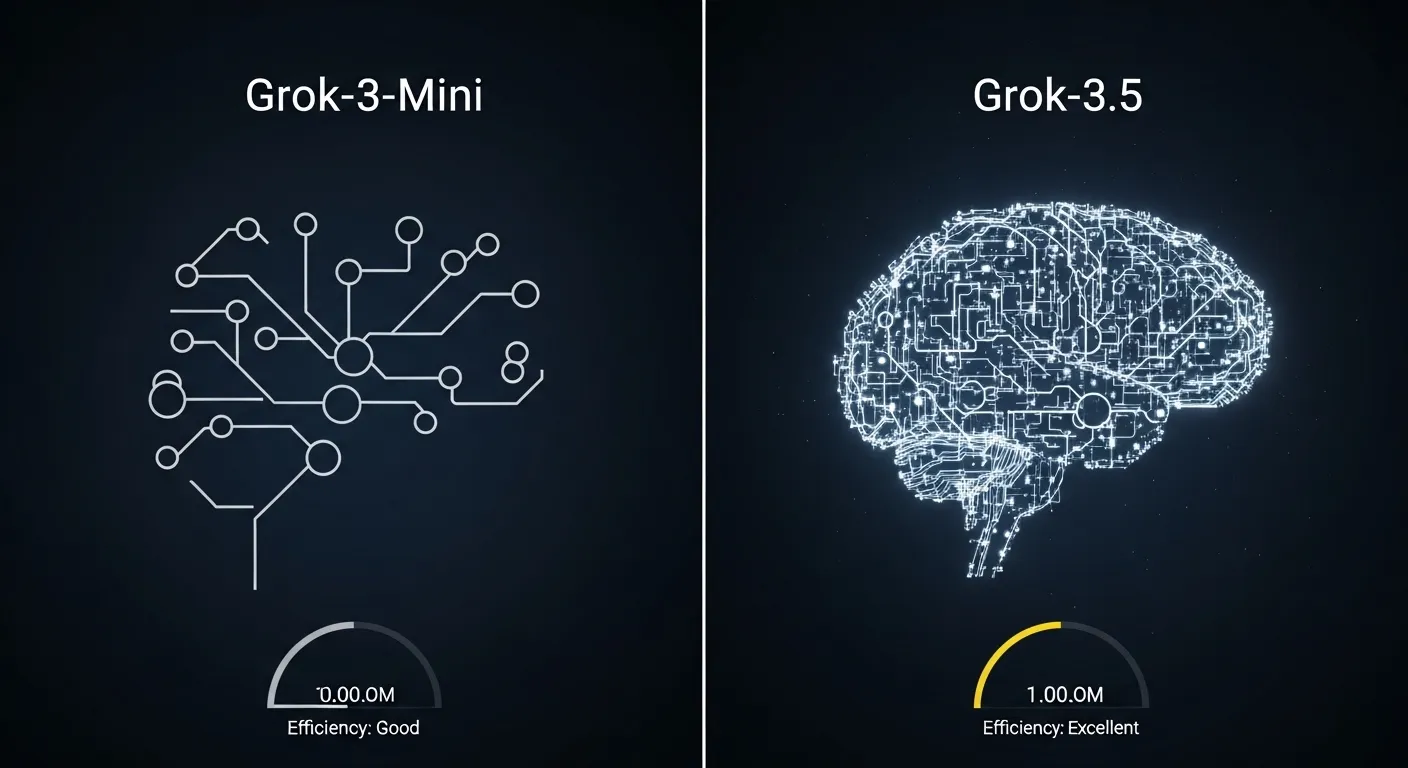

Over time, the Grok ecosystem bifurcated into two strategic directions:

- Efficiency branch → Grok‑3 Mini

- Intelligence branch → Grok‑3.5

This bifurcation is key to grasping their differences.

What Is Grok‑3 Mini?

Grok‑3 Mini is the lightweight, highly optimized variant of the Grok‑3 series. It is engineered for:

- Minimal latency

- High throughput

- Cost‑efficient API consumption

- Scalable production environments

Think of Grok-3 Mini as a finely tuned sports car built for speed and operational efficiency, not for carrying the largest cargo or the heaviest computational load.

Core Characteristics of Grok-3 Mini

Here are its essential attributes:

- Optimized for rapid results

- Strong inferential reasoning given its footprint

- Generous (reported ~1M token) context processing

What Is Grok-3.5?

Grok‑3.5 represents the intelligence‑centric successor in the Grok family. It deepens reasoning, extends multimodal competence, and improves performance on tasks demanding substantial cognitive complexity.

Where Grok‑3 Mini prioritizes throughput, Grok‑3.5 emphasizes depth and understanding — it’s like a high‑end analytical engine built for serious intellectual challenges.

Expected or Confirmed Advancements in Grok‑3.5

Among the primary enhancements are:

- Superior logical and mathematical reasoning

- Advanced vision and multimodal comprehension

- First‑class persistent memory mechanisms

- Better retention and handling of lengthy documents

- Enhanced multimodal reasoning (text + image + future modalities)

In Simple Terms:

If your needs go beyond transactional Q&A and focus on deep comprehension and multimodal intelligence, Grok‑3.5 is the stronger candidate.

Grok-3 Mini vs Grok-3.5: Head-to-Head Comparison Table

| Feature | Grok‑3 Mini | Grok‑3.5 |
| --- | --- | --- |
| Primary Focus | Speed & operational efficiency | Reasoning depth & advanced cognition |
| Context Window | ~1M tokens | ~1M tokens (optimized for depth) |
| Response Speed | Very fast | Balanced (slightly slower) |
| Benchmark Accuracy | High | Higher |
| STEM Processing | Strong | Very strong |
| Vision/Multimodal Support | Limited | Advanced |
| Persistent Memory | ❌ No | ✔️ Yes (tiered/premium) |
| Cost | Lower | Premium |
| Ideal Use Cases | Conversational bots & real‑time needs | Research & critical reasoning systems |

Key Insight:

- Mini excels in latency and scalable needs

- 3.5 outperforms in intellectual depth and multimodal understanding

Performance Benchmarks

Benchmark metrics quantify model aptitude across diverse tasks. Although exact numbers can vary by platform configurations and access tier, certain patterns are observable in practice.

Grok-3 Mini Performance

Grok-3 Mini demonstrates strong performance in domains such as:

- Mathematical reasoning

- Automated code synthesis

- Data parsing

- Real‑time question answering

- Context‑aware task processing

It maintains exceptionally fast throughput due to:

- Lower compute intensity

- Efficient transformer inference

- Lean architecture focused on real‑world production needs

Mini’s design is ideal for environments where scalability and speed are paramount and reasonable accuracy must still be maintained.

Grok-3.5 Performance

Grok-3.5, on the other hand, showcases elevated performance on:

- Complex math and logic

- Deep document semantic understanding

- Multimodal interpretation (images + text)

- Long‑chain reasoning challenges

- Persistent memory‑enabled tasks

Its strong performance on research‑grade benchmarks makes it more suitable for situations requiring intellectual rigor and nuanced interpretation.

Overall:

- Mini is faster and more cost‑effective

- 3.5 is deeper and more capable for complex cognition
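
The speed-versus-depth pattern above is easy to check against your own workload. The following is a minimal sketch of a latency harness, not a published benchmark method. `call_model` is a hypothetical stand-in for whatever client function your provider exposes, and the two stub functions only simulate a delay, so the numbers they produce say nothing about either Grok model.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(call_model: Callable[[str], str],
                    prompts: List[str],
                    repeats: int = 3) -> Dict[str, float]:
    """Time call_model on every prompt and summarize wall-clock latency."""
    samples = []
    for prompt in prompts:
        for _ in range(repeats):
            start = time.perf_counter()
            call_model(prompt)  # the response is discarded; only timing matters
            samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "max_s": max(samples),
        "calls": len(samples),
    }


# Hypothetical stubs: replace them with real calls to the models you can access.
def fake_fast_model(prompt: str) -> str:
    time.sleep(0.001)  # simulated delay, not a measured figure
    return "ok"


def fake_slow_model(prompt: str) -> str:
    time.sleep(0.005)  # simulated delay, not a measured figure
    return "ok"


if __name__ == "__main__":
    prompts = ["Summarize this ticket.", "Explain this stack trace."]
    for name, fn in [("fast stub", fake_fast_model), ("slow stub", fake_slow_model)]:
        print(name, measure_latency(fn, prompts))
```

Running the same prompts through both models, and reporting the median rather than a single call, keeps one slow network round trip from skewing the comparison.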

Real-World Use Cases

Here’s how real‑world applications align with each model:

| Use Case | Recommended Model |
| --- | --- |
| Live Chatbots | Grok‑3 Mini |
| Coding Assistance Tools | Grok‑3 Mini |
| Enterprise AI Agents | Grok‑3.5 |
| Research & Academia | Grok‑3.5 |
| Vision‑Driven Apps | Grok‑3.5 |
| Budget‑Sensitive Apps | Grok‑3 Mini |
| High‑Volume API Systems | Grok‑3 Mini |

For Startups

Startups often prioritize:

- Lower operating expenses

- Rapid iteration

- Scalable performance

Grok‑3 Mini typically yields the best return on investment.

For Enterprises

Enterprises require:

- High reasoning accuracy

- Multimodal capacity

- Persistent memory

- Integration with enterprise workflows

Grok‑3.5 is often the appropriate choice for enterprise‑level AI deployments.

Pricing & Cost Efficiency

While exact pricing varies by API provider, subscription tier, and usage pattern, the broad trends in 2026 are:

| Cost Factor | Grok‑3 Mini | Grok‑3.5 |
| --- | --- | --- |
| API Consumption Cost | Lower | Higher |
| Compute Usage | Optimized | Intensive |
| Enterprise Licensing | Moderate | Premium |
| Startup ROI | High | Moderate |

If you are:

- A startup → Grok‑3 Mini delivers better ROI

- A research lab → Grok‑3.5 delivers deeper analysis

- An enterprise AI architect → Grok‑3.5 offers depth and expandability

- A cost‑conscious developer → Grok‑3 Mini is the practical choice
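
To turn "lower" and "higher" into a budget line, the arithmetic is tokens per request times price per token times request volume. The sketch below shows that calculation. The prices in `LIGHT_TIER` and `PREMIUM_TIER` are hypothetical placeholders chosen for illustration, not actual Grok pricing; substitute the figures from your provider's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price per one million tokens, in US dollars (placeholder values)."""
    input_per_million: float
    output_per_million: float


def monthly_cost(pricing: Pricing, requests_per_month: int,
                 input_tokens: int, output_tokens: int) -> float:
    """Estimated monthly spend when every request has the same token shape."""
    per_request = (input_tokens * pricing.input_per_million
                   + output_tokens * pricing.output_per_million) / 1_000_000
    return requests_per_month * per_request


# Hypothetical prices for illustration only; they are NOT real Grok prices.
LIGHT_TIER = Pricing(input_per_million=0.50, output_per_million=1.00)
PREMIUM_TIER = Pricing(input_per_million=5.00, output_per_million=10.00)

if __name__ == "__main__":
    for name, tier in [("lighter model", LIGHT_TIER), ("premium model", PREMIUM_TIER)]:
        cost = monthly_cost(tier, requests_per_month=100_000,
                            input_tokens=800, output_tokens=200)
        print(f"{name}: ${cost:,.2f} per month")
```

The tenfold gap in this example comes entirely from the placeholder prices. The useful part is the structure: at high request volumes, even a modest per-token difference dominates the monthly bill.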

Pros & Cons

Grok-3 Mini

Pros

- Extremely low latency

- Cost‑effective and efficient

- Very strong code and data reasoning

- Scales easily in API systems

- Well‑suited for high‑throughput chat environments

Cons

- Less contextual depth than 3.5

- Limited multimodal support

- No persistent memory

- Not ideal for research‑level cognition

Grok-3.5

Pros

- Stronger reasoning and deeper semantic understanding

- Better benchmark performance

- Powerful multimodal processing

- Persistent memory (where available)

- Ideal for knowledge‑intensive AI assistants

Cons

- Higher cost

- Slightly slower inference speeds

- Overkill for simple use cases

Deep Feature Breakdown

Let’s analyze major components in greater depth.

Context Window (~1M Tokens Explained)

Both models support an approximate 1 million token context span, enabling them to process:

- Full books

- Entire code repositories

- Legal contracts

- Extensive research papers

However, Grok‑3.5 manages long reasoning chains more gracefully due to superior attention optimization.
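
Before sending a book or a repository to either model, it helps to estimate whether it fits. The sketch below assumes the approximate 1M token window reported above and a rough four-characters-per-token heuristic for English text. Both constants are assumptions, and a real tokenizer will give different counts.

```python
from typing import List

CONTEXT_WINDOW_TOKENS = 1_000_000  # approximate figure reported in this guide
CHARS_PER_TOKEN = 4                # rough heuristic for English text (assumption)


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(documents: List[str], reserved_for_output: int = 8_000) -> bool:
    """True if all documents plus an output budget fit in the window."""
    needed = sum(estimate_tokens(doc) for doc in documents) + reserved_for_output
    return needed <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    contract = "lorem ipsum " * 50_000  # 600,000 characters of filler text
    print(estimate_tokens(contract), fits_in_context([contract]))
```

Reserving part of the window for the model's answer matters in practice: a prompt that fills the whole window leaves no room for a response.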

Multimodal Capability

Grok‑3 Mini:

- Mainly text‑centric

- Basic token understanding

Grok‑3.5:

- Advanced image interpretation

- Better visual‑text reasoning

- Possible groundwork for future speech and video modalities

If your application requires vision‑powered intelligence, 3.5 is significantly more adept.

Persistent Memory

Persistent memory allows the model to remember past interactions across sessions, enabling:

- Personalized assistants

- Long‑term research tracking

- Continuity in conversational agents

Support differs by model:

- Grok‑3 Mini → Not supported

- Grok‑3.5 → Supported (in select tiers)

This is crucial for agents designed to behave like human collaborators.
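
To make "remembering across sessions" concrete, here is a minimal application-side sketch: notes keyed by user are saved to a JSON file and prepended to the next session's prompt. This is not how Grok implements persistent memory, which this guide does not describe. `SessionMemory`, the file layout, and the prompt format are all hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class SessionMemory:
    """Stores short notes per user in a JSON file so they survive restarts."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self._notes: Dict[str, List[str]] = {}
        if path.exists():
            self._notes = json.loads(path.read_text(encoding="utf-8"))

    def remember(self, user_id: str, note: str) -> None:
        """Append a note and write the whole store back to disk."""
        self._notes.setdefault(user_id, []).append(note)
        self.path.write_text(json.dumps(self._notes, indent=2), encoding="utf-8")

    def recall(self, user_id: str, limit: int = 5) -> List[str]:
        """Return the most recent notes for this user, oldest first."""
        return self._notes.get(user_id, [])[-limit:]

    def build_prompt(self, user_id: str, question: str) -> str:
        """Prepend remembered notes to the new question."""
        notes = self.recall(user_id)
        if not notes:
            return question
        context = "\n".join(f"- {n}" for n in notes)
        return f"Known about this user:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = Path(tempfile.mkdtemp()) / "memory.json"
    SessionMemory(store).remember("alice", "prefers Python examples")
    # A new object simulates a later session reading the same file.
    print(SessionMemory(store).build_prompt("alice", "How do I parse CSV?"))
```

A layer like this is one way to approximate continuity on a model without built-in memory, at the cost of spending context tokens on the recalled notes.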

Grok Model Evolution – Strategic Positioning

To comprehend the macro picture:

- Early Grok focused on real‑time data + personality

- Grok‑3 expanded reasoning and context

- Grok‑3 Mini optimized performance and throughput

- Grok‑3.5 deepened intelligence and multimodal understanding

This split embodies a classic AI product strategy:

Efficiency Branch vs Intelligence Branch

Each branch serves a fundamentally different class of applications.

Grok-3 Mini vs Grok‑3.5 – Final Verdict

Choose Grok‑3 Mini if:

- Fast responses are critical

- You have heavy API traffic

- Cost efficiency is a priority

- You are building chatbots, helpers, or coding tools

- You don’t need persistent memory or advanced multimodal reasoning

Choose Grok‑3.5 if:

- Deep reasoning matters

- You develop enterprise AI agents

- You need multimodal and visual understanding

- Persistent memory across sessions is key

- You are conducting research or building high‑intelligence systems

There is no universal “best” model; it depends on your specific goals and constraints.
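
The two checklists above can be written as a small decision helper. This is a sketch of one possible reading: it assumes that a capability the guide attributes only to Grok‑3.5 (persistent memory, vision, deep reasoning) outweighs speed and cost concerns. That precedence rule, along with the `Requirements` fields and `recommend_model`, is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Requirements:
    needs_persistent_memory: bool = False
    needs_vision: bool = False
    needs_deep_reasoning: bool = False
    latency_critical: bool = False
    budget_sensitive: bool = False


def recommend_model(req: Requirements) -> Tuple[str, List[str]]:
    """Return a model name and the reasons behind the recommendation."""
    reasons = []
    if req.needs_persistent_memory:
        reasons.append("persistent memory across sessions")
    if req.needs_vision:
        reasons.append("multimodal and visual understanding")
    if req.needs_deep_reasoning:
        reasons.append("deep reasoning")
    if reasons:
        # Assumed precedence: capability needs beat speed and cost concerns.
        return "Grok-3.5", reasons

    # Nothing forces the larger model, so default to the faster, cheaper one.
    if req.latency_critical:
        reasons.append("fast responses")
    if req.budget_sensitive:
        reasons.append("cost efficiency")
    return "Grok-3 Mini", reasons or ["no need for memory, vision, or deep reasoning"]


if __name__ == "__main__":
    chatbot = Requirements(latency_critical=True, budget_sensitive=True)
    research_agent = Requirements(needs_deep_reasoning=True, needs_vision=True)
    print(recommend_model(chatbot))
    print(recommend_model(research_agent))
```

Returning the reasons alongside the name makes the trade-off visible, which is useful when a project needs both low cost and one of the advanced capabilities.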

FAQs

Q: Is Grok‑3.5 available?

A: Grok‑3.5 has been rolled out progressively, with staged previews and limited tier access. Availability may vary by subscription and API plan.

Q: Is Grok‑3.5 better than Grok‑3 Mini?

A: Yes, in reasoning depth and multimodal competence. If your priority is speed and budget efficiency, Grok‑3 Mini is the better fit.

Q: Which model is better for coding?

A: For rapid code generation at scale → Grok‑3 Mini. For sophisticated architectural coding tasks → Grok‑3.5.

Q: Which model is cheaper?

A: Grok‑3 Mini is generally more affordable due to its lower compute requirements.

Q: Should startups choose Grok‑3.5?

A: Only if they need advanced reasoning, multimodal processing, or persistent memory; otherwise, Mini is the better ROI choice.

Conclusion

Choosing between Grok-3 Mini and Grok-3.5 ultimately depends on your objectives, resource constraints, and application needs. Both models are part of a powerful, evolving AI ecosystem, but they are optimized for different priorities:

- Grok-3 Mini excels in speed, cost-efficiency, and scalability, making it ideal for startups, high-volume APIs, and real-time conversational tools. It delivers strong performance in coding, STEM tasks, and general Q&A without requiring heavy computational resources.

- Grok-3.5 is designed for deep reasoning, multimodal intelligence, and long-context understanding. It shines in research, enterprise AI agents, complex workflow automation, and applications that benefit from persistent memory. It comes at a higher cost and slightly slower speed, but in return it offers greater intellectual depth and versatility.