Introduction

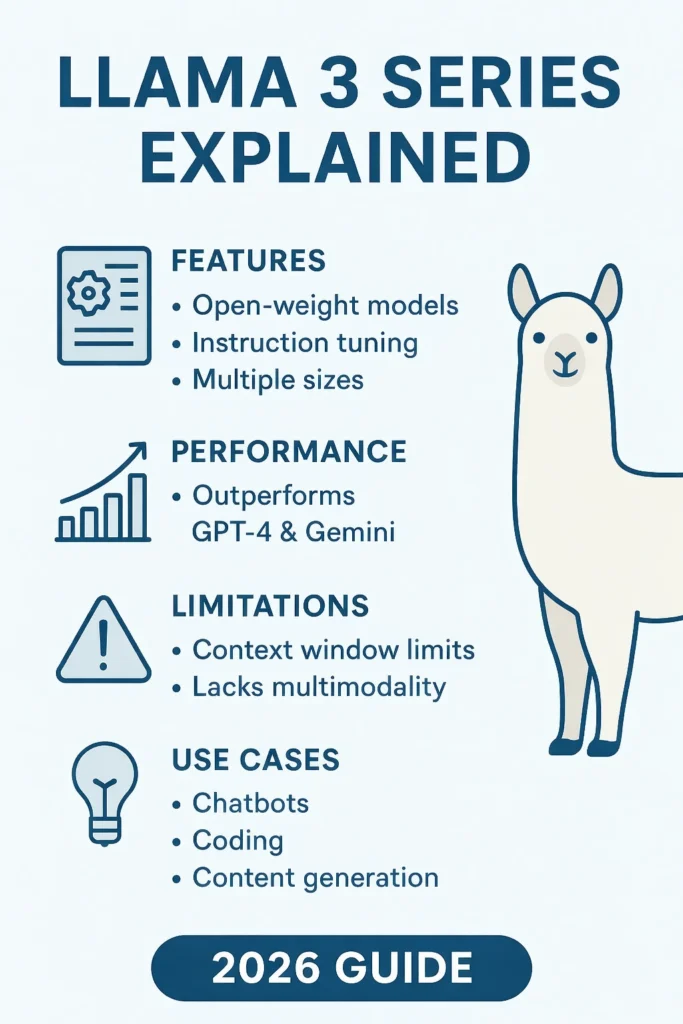

In the rapidly evolving world of AI and natural language processing (NLP), the Llama 3 Series has emerged as one of the most impactful open‑weight large language model (LLM) families available in 2026 for researchers, developers, and enterprises alike.

Unlike closed proprietary services where you pay per usage or rely on an external API, Llama 3 gives you significant autonomy — including the ability to download and self‑host model weights, integrate into internal systems, and customize behavior based on your specific needs.

This guide explains, in plain language:

- What the Llama 3 model family is

- Why it matters in the AI landscape of 2026

- How its benchmark performance stacks up

- Its advantages and real constraints

- Practical business and developer use cases

- How to deploy, optimize, and fine-tune Llama 3 for real systems

Llama 3 isn’t just “another AI model”: it anchors one of the most mature open-weight LLM ecosystems available today.

What Is the Llama 3 Series?

The Llama 3 Series is Meta’s third generation of open‑weight transformer‑based large language models, designed with a strong emphasis on performance, adaptability, and real‑world use. Unlike closed systems that restrict access, Llama 3’s core philosophy is to give users transparency and control over their AI workloads.

At its heart, Llama 3 is part of a broader trend in which organizations release powerful models with downloadable weights under comparatively open licenses, enabling developers to run them locally, privately, or on cloud infrastructure without per-call billing (source: GeeksforGeeks).

- Open‑weight availability — No proprietary API lock‑in

- Instruction tuning — For better task compliance

- Multiple model sizes — From compact to research/enterprise‑grade

- Longer context capabilities in advanced variants

- Support for rich NLP and coding workflows

Why Llama 3 Matters in 2026

True Open AI Access

Llama 3 makes powerful NLP reachable to individuals, universities, startups, and corporations without mandatory API costs or vendor lock‑in.

Great Cost Efficiency

Instead of paying per token or per API call, you can run Llama 3 using local hardware or cloud instances you control — drastically reducing operating costs for high‑volume use cases.
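The cost argument above comes down to a break-even calculation: a fixed monthly hosting bill versus a per-token API price. The sketch below shows that arithmetic only. The function names and every number in the usage example are hypothetical placeholders, not real prices, and the model ignores throughput limits, staffing, and hardware depreciation.

```python
def break_even_tokens(monthly_hosting_cost: float,
                      api_price_per_million_tokens: float) -> float:
    """Monthly token volume at which self-hosting and API billing cost the same."""
    if api_price_per_million_tokens <= 0:
        raise ValueError("API price must be positive")
    return monthly_hosting_cost / api_price_per_million_tokens * 1_000_000


def cheaper_option(monthly_tokens: float,
                   monthly_hosting_cost: float,
                   api_price_per_million_tokens: float) -> str:
    """Compare the two bills for a given monthly token volume."""
    api_bill = monthly_tokens / 1_000_000 * api_price_per_million_tokens
    return "self-hosted" if monthly_hosting_cost < api_bill else "api"


# HYPOTHETICAL inputs: 1500 per month for a GPU instance, 5 per million API tokens.
threshold = break_even_tokens(1500, 5)
print(f"Break-even volume: {threshold:,.0f} tokens per month")
```

Below the break-even volume the per-token API is cheaper under these assumptions; above it, the fixed hosting cost wins.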

Flexible Deployment

Llama 3 supports self‑hosting, edge deployment, and integration into custom applications without licensing barriers in many cases. This flexibility is a game-changer for privacy‑centric workflows and organizations with data governance requirements.

Strong Adoption & Ecosystem

A growing body of tools and community contributions — from optimized runtimes to fine‑tuning utilities — enhances the model’s usefulness.

Core Versions of the Llama 3 Series

| Model Variant | Parameter Count | Context Window | Typical Uses |
|---|---|---|---|
| Llama 3-8B | ~8B parameters | ~8,192 tokens | Lightweight NLP tasks and local use |
| Llama 3-70B | ~70B parameters | ~8,192 tokens | Advanced reasoning and content generation |
| Llama 3.1 / 3.2 / 3.3 (up to 405B) | Up to 405B parameters | Up to 128,000 tokens | Extensive documents, complex workflows, research-level NLP |

Understanding the Context Window

A context window is the amount of text the model can process in a single prompt or interaction. A larger context window means the model can keep better track of long conversations, legal documents, entire books, or large structured code bases without losing context. Advanced Llama 3 variants support very large windows of up to 128,000 tokens, which opens up new possibilities for long-form NLP tasks (source: lhncbc.nlm.nih.gov).
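To make the idea concrete, here is a minimal sketch that checks whether a prompt is likely to fit in a given window. It assumes a crude average of four characters per token for English prose, which is only a heuristic; a real check would count tokens with the model's own tokenizer. The dictionary keys are illustrative labels, not official model identifiers.

```python
import math

# Window sizes in tokens, taken from the version table above.
CONTEXT_WINDOWS = {
    "llama-3-8b": 8_192,
    "llama-3-70b": 8_192,
    "llama-3.1-long-context": 128_000,
}

CHARS_PER_TOKEN = 4  # ASSUMPTION: rough average for English text


def estimate_tokens(text: str) -> int:
    """Rough token estimate; replace with a real tokenizer count in practice."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, window: int, reserve_for_output: int = 512) -> bool:
    """True if the prompt plus room reserved for the reply fits in the window."""
    return estimate_tokens(text) + reserve_for_output <= window


document = "a" * 40_000  # about 10,000 estimated tokens
for name, window in CONTEXT_WINDOWS.items():
    print(name, fits_in_context(document, window))
```

Reserving part of the window for the reply matters because the prompt and the generated output share the same token budget.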

Deep Dive: Llama 3 Features That Matter

Massive and Diverse Training Data

Llama 3 models are trained on an enormous dataset comprising text, code, and structured knowledge, reportedly more than 15 trillion tokens drawn from publicly available sources. This volume and variety allow the models to learn rich linguistic patterns and reasoning skills (source: Ars Technica).

- Better understanding of nuanced language

- Stronger reasoning over varied domains

- Better handling of specialized content like code

Instruction‑Tuned Variants

Llama 3’s instruction-tuned models are refined beyond raw next-token prediction: they are further trained to follow human instructions, which makes them more useful in real applications such as chatbots, task execution, and information extraction.

- More coherent conversation outputs

- Better compliance with tasks

- Improved performance on directive prompts
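Instruction-tuned models expect prompts wrapped in a chat format with role headers. The sketch below assembles a single-turn prompt using the special tokens from Meta's published Llama 3 instruct format. The helper name `build_llama3_prompt` is hypothetical, and in practice the chat template shipped with your tokenizer or runtime should be treated as authoritative.

```python
def build_llama3_prompt(system: str, user: str) -> str:
    """Assemble a single-turn prompt in the Llama 3 instruct chat format."""

    def block(role: str, content: str) -> str:
        # Each message is a role header, a blank line, the text, then an end-of-turn token.
        return f"<|start_header_id|>{role}<|end_header_id|>\n\n{content}<|eot_id|>"

    return (
        "<|begin_of_text|>"
        + block("system", system)
        + block("user", user)
        # An open assistant header cues the model to write its reply next.
        + "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


prompt = build_llama3_prompt(
    "You are a concise assistant.",
    "Summarize the benefits of open-weight models in one sentence.",
)
print(prompt)
```

A base (non-instruct) model has not been trained on this format, so the same wrapper would not be expected to help there.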

Context Expansion in Advanced Models

The original Llama 3 models supported a context of about 8,192 tokens, which is sufficient for many typical use cases. Newer sub-versions (3.1/3.2/3.3) support context lengths of up to 128,000 tokens (source: lhncbc.nlm.nih.gov).

What does this mean?

You can feed the model extremely long documents, such as books, manuals, or multi-file code projects, and expect it to retain context across the whole input. Typical applications include:

- Contract analysis

- Legal summarization

- Long‑form content generation

- Multi‑agent reasoning workflows

Tokenizer and Multilingual Support

Llama 3 uses a tokenizer with a vocabulary size of ~128K tokens, enabling more efficient encoding of global languages and rarer words. This is particularly helpful for non‑English languages or domains with large vocabularies.

- Better handling of global text

- Improved accuracy on languages with diverse vocabularies

- Reduced token fragmentation
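The effect of vocabulary size on fragmentation can be illustrated with a toy example. The sketch below is not Llama 3's tokenizer (which is a byte-pair-encoding tokenizer); it is a simple longest-match segmenter with two made-up vocabularies, meant only to show why a larger vocabulary tends to produce fewer tokens for the same word.

```python
def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Toy longest-match segmentation; unknown spans fall back to single characters."""
    max_len = max(len(entry) for entry in vocab)
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking until something matches.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if length == 1 or piece in vocab:
                tokens.append(piece)
                i += length
                break
    return tokens


# HYPOTHETICAL vocabularies, chosen only for illustration.
small_vocab = {"token", "iz", "a", "t", "i", "o", "n"}
large_vocab = small_vocab | {"tokenization", "multilingual"}

word = "tokenization"
print(greedy_tokenize(word, small_vocab))
print(greedy_tokenize(word, large_vocab))
```

With the small vocabulary the word splits into several pieces; with the larger one it is a single token, which means shorter sequences and more usable room in the context window.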

Benchmarks & Performance

| Benchmark | Llama 3-8B | Llama 2-13B | Llama 3-70B | Llama 2-70B |
|---|---|---|---|---|
| MMLU (5-shot) | 66.6 | 53.8 | 79.5 | 69.7 |
| ARC-Challenge (25-shot) | 78.6 | 67.6 | 93.0 | 85.3 |
| HumanEval (Code) | 62.2 | 14.0 | 81.7 | 25.6 |

These results show that the Llama 3 family, especially the 70B variant, consistently outperforms earlier open models such as Llama 2, particularly in reasoning, comprehension, and coding tasks.
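The size of each improvement can be read directly off the benchmark table. As a small worked example, the snippet below computes the point gains of each Llama 3 model over the Llama 2 model it is compared with, using only the scores listed above.

```python
# Scores copied from the benchmark table above.
SCORES = {
    "MMLU (5-shot)": {"Llama 3-8B": 66.6, "Llama 2-13B": 53.8,
                      "Llama 3-70B": 79.5, "Llama 2-70B": 69.7},
    "ARC-Challenge (25-shot)": {"Llama 3-8B": 78.6, "Llama 2-13B": 67.6,
                                "Llama 3-70B": 93.0, "Llama 2-70B": 85.3},
    "HumanEval (Code)": {"Llama 3-8B": 62.2, "Llama 2-13B": 14.0,
                         "Llama 3-70B": 81.7, "Llama 2-70B": 25.6},
}

# Each Llama 3 model paired with the Llama 2 model in the neighbouring column.
PAIRS = [("Llama 3-8B", "Llama 2-13B"), ("Llama 3-70B", "Llama 2-70B")]


def point_gains(scores: dict) -> dict:
    """Absolute score difference (in points) for each benchmark and model pair."""
    return {
        bench: {f"{new} vs {old}": round(row[new] - row[old], 1) for new, old in PAIRS}
        for bench, row in scores.items()
    }


for bench, gains in point_gains(SCORES).items():
    print(bench, gains)
```

The largest gaps are on HumanEval, which matches the observation that coding is where the generational jump is most visible.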

Real Limitations You MUST Address

Hallucination & Bias

Like all large language models, Llama 3 can hallucinate — confidently outputting information that sounds correct but is false. This can pose significant issues in sensitive or safety‑critical applications.

Mitigations:

- Human evaluation pipelines

- Validation layers

- Prompt engineering safeguards
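One possible shape for a validation layer is sketched below. It assumes the model has been prompted to reply in JSON with `answer` and `sources` fields (a hypothetical schema chosen for this example) and routes anything malformed or unsourced to human review. It checks structure only; it cannot confirm that the cited sources actually support the answer.

```python
import json

REQUIRED_KEYS = {"answer", "sources"}  # HYPOTHETICAL schema for this sketch


def validate_response(raw: str) -> dict:
    """Accept well-formed, sourced replies; flag everything else for review."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {"status": "needs_review", "reason": "not valid JSON"}
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return {"status": "needs_review", "reason": "missing required fields"}
    if not data["sources"]:
        return {"status": "needs_review", "reason": "no sources cited"}
    return {"status": "accepted", "data": data}


good = '{"answer": "Paris", "sources": ["internal-wiki/geo"]}'
bad = "Paris is the capital, trust me."
print(validate_response(good)["status"])
print(validate_response(bad)["status"])
```

Replies flagged `needs_review` would then feed the human evaluation pipeline rather than reaching the end user directly.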

Context Window Limits in Base Models

The original Llama 3 models, with context windows of about 8,192 tokens, are sufficient for many tasks, but not for processing entire books or very large structured data in a single prompt. If you need extremely long context handling, you’ll want the expanded variants with windows of up to about 128,000 tokens (source: lhncbc.nlm.nih.gov).
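When only an 8K-window model is available, a common workaround is to split long input into chunks that each fit the window and process them one at a time. The following is a minimal sketch under stated assumptions: it splits on blank lines, uses a rough four-characters-per-token estimate instead of a real tokenizer, and the function name and defaults are illustrative.

```python
def chunk_text(text: str, window: int = 8_192, reserve_for_output: int = 1_024,
               chars_per_token: int = 4) -> list[str]:
    """Group paragraphs into chunks whose estimated size fits the context window."""
    budget_chars = (window - reserve_for_output) * chars_per_token
    if budget_chars <= 0:
        raise ValueError("window must be larger than the reserved output space")

    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= budget_chars:
            current = candidate
            continue
        if current:
            chunks.append(current)
        # A single oversized paragraph is hard-split at the budget boundary.
        while len(paragraph) > budget_chars:
            chunks.append(paragraph[:budget_chars])
            paragraph = paragraph[budget_chars:]
        current = paragraph
    if current:
        chunks.append(current)
    return chunks


long_document = "\n\n".join(["x" * 10_000] * 10)
pieces = chunk_text(long_document)
print(len(pieces), max(len(p) for p in pieces))
```

Chunking loses cross-chunk context, so results usually need a second pass (for example, summarizing the per-chunk summaries), which is the trade-off the long-context variants avoid.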

Multimodal Capabilities Are Not Universal

While some advanced variants and tooling integrations provide multimodal inputs (like images), the primary text‑only Llama 3 models don’t natively process images, video, or audio without connected systems — unlike some competitors with built‑in multimodal support.

Computational and Fine‑Tuning Costs

Fine-tuning or serving the larger models requires:

- Substantial GPU/TPU resources

- Technical expertise in model training

- Careful validation workflows
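A quick way to gauge the hardware requirement is to estimate the memory needed just to hold the weights: parameter count times bytes per parameter. The sketch below does that arithmetic for the model sizes discussed in this guide. It is a lower bound only, since it ignores the KV cache, activations, and the optimizer state that fine-tuning adds; the precision labels are common conventions, not a statement about any specific runtime.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * BYTES_PER_PARAM[precision] / 1e9


for size in (8, 70, 405):
    row = {p: weight_memory_gb(size, p) for p in BYTES_PER_PARAM}
    print(f"{size}B parameters: {row}")
```

By this estimate an 8B model at 16-bit precision needs about 16 GB for weights, while a 405B model needs roughly 810 GB, which is why the largest variants call for multi-GPU servers or aggressive quantization.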

Llama 3 vs the AI Competition

| Feature | Llama 3 | GPT-4 / GPT-4o | Gemini | Claude |
|---|---|---|---|---|
| Open Weights | ✔️ | ❌ | ❌ | ❌ |
| Max Context | Up to 128K | ~128K+ | ~128K+ | ~200K+ |
| Fine-Tuning Control | ✔️ | Partial | ❌ | ❌ |
| Built-in Multimodal | Partial/Tool-based | Excellent | Excellent | Excellent |
| Cost | Free / Self-hosted | Paid | Paid | Paid |

Key Takeaways:

✔ Llama 3 is ideal where control and open access matter.

✔ Closed systems like GPT‑4o, Gemini, and Claude generally excel at built‑in safety and multimodal workflows.

✔ For long‑context heavy tasks or advanced multimodal use, proprietary systems still hold strengths.

Pros & Cons

Pros

- Truly open regarding model weights

- Strong reasoning & NLP performance

- Highly customizable via self‑hosting

- Cost‑effective over large workloads

Cons

- Base models have limited context windows

- Core models are text-only; multimodal input depends on specific variants or external tooling

- Fine‑tuning demands expertise and hardware

- Safety guardrails are developer‑managed

Real World Use Cases

Enterprise Assistants & Knowledge Tools

- Answer employee questions

- Summarize company documents

- Provide workflow guidance

Coding Assistance and Dev Tools

- Auto-completion systems

- Bug explainers

- Code analytics assistants

Local & Offline Inference

- Privacy-first workflows

- No dependency on external APIs

- Offline inference for secure environments

Safety, Compliance & Responsible Use

Data Privacy

Never expose sensitive data without proper safeguards.
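One simple safeguard is to redact obvious identifiers before text is logged or placed in a prompt. The sketch below masks email addresses and phone-like numbers with regular expressions. The patterns are illustrative assumptions and will miss many kinds of personal data, so treat this as a first filter, not a complete privacy control.

```python
import re

# Deliberately simple patterns; real deployments need broader, tested rules.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


message = "Contact jane.doe@example.com or +1 (555) 123-4567."
print(redact(message))
```

Running redaction before the prompt is built keeps the raw identifiers out of model inputs, logs, and any fine-tuning data derived from them.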

Bias & Harm Testing

Regularly audit for bias, harmful stereotypes, and misinformation — especially in customer‑facing applications.

Regulatory Compliance

Check deployments against the data-protection regulations that apply to you, for example:

✔ GDPR

✔ CCPA

FAQs

A: The main weights are openly downloadable, though Meta’s terms of use still apply.

A: On many benchmarks, especially reasoning and code, Llama 3 holds up strongly, though proprietary models often still lead in multimodality and safety tooling.

8B: Local, lightweight tasks

70B: Complex applications

128K variants: Long‑form tasks

A: The core lineup focuses on text; multimodal workflows require additional tooling.

Conclusion

The Llama 3 Series stands as a major development in open-weight AI in 2026, offering:

- Competitive NLP performance

- Transparent access to powerful models

- Deployment flexibility (local & cloud)

While constraints — like limited multimodal support and substantial fine‑tuning costs — remain, its open nature makes Llama 3 highly attractive for academic, enterprise, research, and privacy‑centric AI systems.