Introduction

Artificial intelligence is immature at a pace that few industries have ever witnessed. By 2026, AI systems will no longer be limited to producing fluent paragraphs or like isolated factual queries. Modern large language painting now interprets images, analyzes massive knowledge archives, reasons across confused data formats, and operates reliably at an enterprise level.

At the heart of this shift stands Llama 4 Maverick, Meta’s leading multimodal AI system to date. Designed to move beyond common text‑only intelligence, Maverick speaks for a shift toward scalable, efficient, and customizable AI grounded in modern NLP and multimodal learning principles.

Unlike earlier dense transformer models that activate every parameter for each inference request, Llama 4 Maverick uses a Mixture‑of‑Experts (MoE) architecture. This approach enables selective parameter activation, improved computational efficiency, and superior scalability without linear cost growth. As a result, Maverick delivers high‑level reasoning performance while remaining practical for large‑scale deployments.

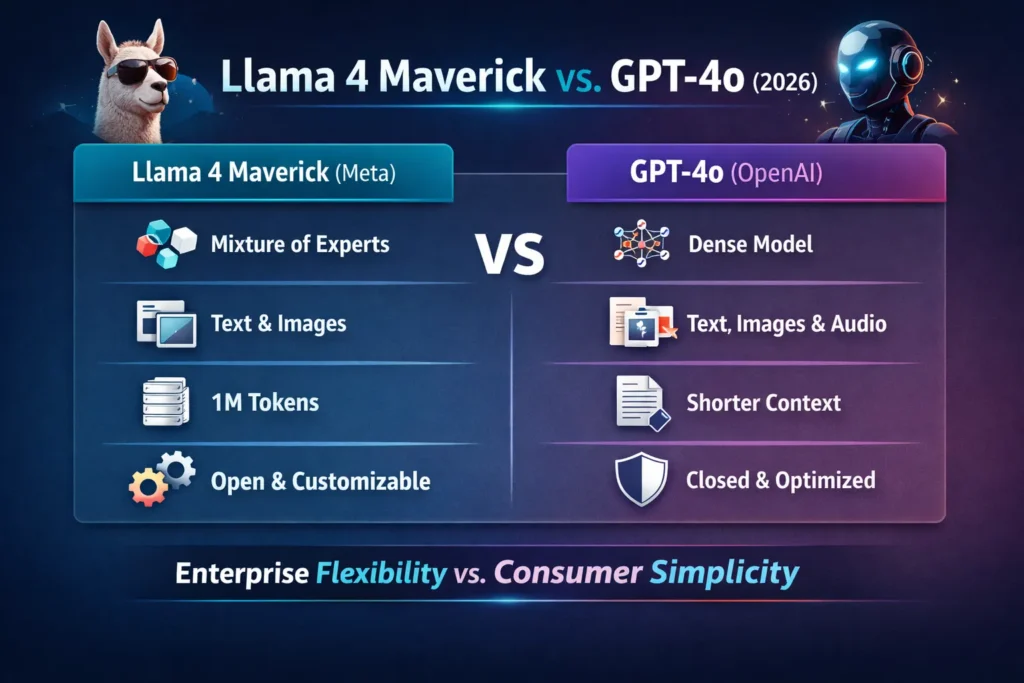

While closed models such as GPT‑4o dominate consumer‑facing applications, Llama 4 Maverick embodies Meta’s long‑term strategy: an open‑leaning, enterprise‑ready AI ecosystem that prioritizes flexibility, control, and deep contextual reasoning.

In this comprehensive guide, you will learn:

- What Llama 4 Maverick is and how it works

- Its multimodal and Mixture‑of‑Experts architecture is explained in simple NLP terms

- Performance benchmarks and real‑world behavior

- A detailed comparison with GPT‑4o

What Is Llama 4 Maverick?

Llama 4 Maverick is Meta’s flagship multimodal large language model released under the Llama 4 Community License. It is engineered for advanced natural language understanding, multimodal reasoning, software development, visual interpretation, and enterprise‑grade AI workflows.

From an NLP perspective, Maverick integrates transformer‑based sequence modeling with expert routing mechanisms and early fusion multimodal embeddings. This enables the model to reason across text and images while maintaining contextual coherence over extremely long sequences.

Unlike traditional dense models that engage all parameters during inference, Maverick selectively activates specialized subnetworks — or “experts” — depending on task requirements. This design significantly reduces computational overhead while preserving expressive capacity.

Key Model Overview

- Model Family: Llama 4

- Architecture: Mixture‑of‑Experts (MoE)

- Active Parameters: ~17B per inference

- Total Parameters: ~400B

- Modalities: Text + Image

- Context Window: Up to ~1 million tokens

- License: Llama 4 Community License

This architectural configuration allows Llama 4 Maverick to compete with closed proprietary systems while offering greater transparency, customization, and deployment control.

Why Llama 4 Maverick Matters in 2026

In earlier generations of AI, success was often equated with raw parameter count. Modern AI effectiveness depends on a combination of multimodal reasoning, efficiency, adaptability, and operational scalability.

Llama 4 Maverick addresses these requirements directly.

Key Reasons Maverick Is Important

- Supports massive context windows ranging from hundreds of thousands to nearly one million tokens

- Performs joint reasoning over textual and visual inputs

- Achieves higher efficiency through sparse expert activation

- Operates within a more open and customizable ecosystem than closed competitors

- Designed for enterprise, research, and infrastructure‑level deployment

From an NLP research standpoint, Maverick demonstrates how sparse architectures can outperform dense models in real‑world tasks without proportional increases in compute cost.

How Llama 4 Maverick Works

How MoE Works in Llama 4 Maverick

- The model contains approximately 128 expert networks

- For each inference request, only a subset of experts is activated

- Roughly ~17B parameters are used per task

- A learned routing mechanism determines expert selection based on:

- Input semantics

- Task complexity

- Modality

Why MoE Matters

- Faster inference latency

- Reduced computational expense

- Enhanced task specialization

- Scalability without linear cost escalation

This approach allows Maverick to deliver high‑quality reasoning while remaining resource‑efficient — a critical requirement for enterprise adoption.

Key Features of Llama 4 Maverick

Native Multimodal Capabilities

Llama 4 Maverick natively processes:

- Natural language text

- Visual inputs such as images, diagrams, and screenshots

- Joint text‑image reasoning Tasks

From an NLP standpoint, this capability is enabled by shared embedding spaces that align linguistic tokens with visual representations.

What This Enables

- Explaining charts, graphs, and technical diagrams

- Analyzing user interfaces from screenshots

- Generating context‑aware captions

- Performing visual question answering with high accuracy

Early Fusion Vision‑Language Design

Unlike models that append vision processing as a secondary module, Maverick uses early fusion.

What Is Early Fusion?

- Textual and visual embeddings are integrated at early transformer layers

- Cross‑modal attention occurs throughout the network

- Semantic alignment is stronger and more consistent

Benefits

- Reduced hallucinations

- Improved visual grounding

- More coherent multimodal explanations

Massive Context Window

With support for extremely long sequences, Maverick excels in:

- Long‑form document analysis

- Multi‑repository code comprehension

- Large‑scale research synthesis

- Enterprise knowledge graph reasoning

This capability significantly exceeds that of GPT‑4o in long‑context scenarios.

Open & Customizable Ecosystem

While not fully open source, Llama 4 Maverick offers:

- Greater architectural transparency

- Enterprise‑grade fine‑tuning options

- Flexible on‑premise and cloud deployments

This appeals strongly to organizations seeking ownership and control over their AI infrastructure.

Llama 4 Maverick Multimodal Capabilities

Multimodality is not merely an auxiliary feature — it is central to Maverick’s design philosophy.

What Llama 4 Maverick Can Do

- Interpret images using natural language queries

- Combine visuals with extensive textual context

- Generate structured, step‑by‑step explanations

- Perform reasoning across diagrams, documents, and datasets

Real-World Example

A user uploads a system architecture diagram and asks:

“Explain how this system works and suggest improvements.”

Maverick can:

- Identify visual components

- Align them with textual documentation

- Produce logical explanations

- Recommend optimizations based on design principles

Real-World Testing

Highlights

Although exact scores vary depending on configuration and deployment:

- Strong reasoning performance across NLP tasks

- High accuracy in multimodal understanding

- Competitive results compared to leading open models

- Slight variability relative to tightly optimized closed systems

Community Feedback

Users frequently report:

- Excellent long‑document comprehension

- Strong contextual retention

- Higher verbosity (adjustable via prompting)

- Performance sensitivity to tuning quality

Maverick rewards well-structured prompts and thoughtful fine-tuning more than consumer-oriented models.

Llama 4 Maverick vs GPT-4o

Feature Comparison

- Architecture: MoE vs Dense

- Modalities: Text + Image vs Text + Image + Audio

- Context Window: Up to ~1M tokens vs smaller optimized Conthttps://ultraai.site/ext

- Openness: Semi‑open vs closed

- Customization: High vs limited

- Cost Control: Flexible vs usage‑based

Pros & Cons

Pros

- Open‑leaning ecosystem

- Massive context handling

- Efficient sparse architecture

- Strong enterprise governance

Cons

- Requires technical expertise

- Less polished consumer UX

- Configuration complexity

GPT‑4o Pros

- Plug‑and‑play usability

- Highly optimized inference

- Consistent general reasoning

GPT‑4o Cons

- Closed platform

- Higher long‑term operational costs

- Limited customization

Use Cases for Llama 4 Maverick in 2026

Enterprise AI Assistants

- Internal knowledge retrieval

- Document intelligence

- Automated customer support

Research & Academia

- Multimodal literature analysis

- Long‑form research synthesis

- Data‑intensive reasoning

Software Development

- Large codebase understanding

- UI analysis from screenshots

- Cross‑file debugging assistance

Creative & Media Workflows

- Visual storytelling

- Design critique

- Content ideation

Pricing & Accessibility

Llama 4 Maverick is accessible via:

- Meta‑approved hosting partners

- Enterprise licensing programs

- Custom on‑premise deployments

Pricing depends on:

- Compute consumption

- Context length requirements

- Hosting infrastructure

- Fine‑tuning complexity

At scale, Maverick often delivers superior cost‑to‑control efficiency compared to closed alternatives.

Pros & Cons

Pros

- Advanced multimodal reasoning

- Efficient Mixture‑of‑Experts design

- Extremely large context window

- Flexible enterprise deployment

Cons

- Higher technical barrier to entry

- Benchmark variability

- Not designed for casual consumers

FAQs

A: It depends on the use case. Maverick excels in customization, openness, and long‑context reasoning, while GPT‑4o is superior for plug‑and‑play consumer applications.

A: It is released under the Llama 4 Community License, enabling broad usage with certain restrictions.

A: MoE architectures enable scalability without proportional increases in compute cost, improving efficiency and specialization.

A: It supports extremely large context windows, making it ideal for enterprise and research workloads.

Conclusion

Llama 4 Maverick performs a major milestone in open‑leaning multimodal AI. By integrating Mixture‑of‑Experts planning, massive contextual size, and native vision‑language reasoning, Meta has created an arrangement capable of competing with closed titan like GPT‑4o.

While it is not home for casual users, Maverick excels in firm, research, and custom AI deployments. For systems and developers who set up control, Scalability, and deep multimodal reasoning, it is absolutely worth using in 2026.