Introduction

Meta’s Llama ecosystem has transformed the artificial intelligence landscape at remarkable speed. When Llama 3 launched, it quickly became one of the most widely adopted open-weight transformer families for developers, researchers, startups, and enterprises. It delivered strong reasoning, coding, and instruction-following capabilities while remaining practical for deployment.

Then Meta introduced Llama 4 Scout, and the conversation shifted dramatically.

Suddenly, the community was discussing:

- A 10-million token context window

- A Mixture-of-Experts (MoE) architecture

- Enhanced ultra-long sequence reasoning

- Enterprise-grade scalability and throughput optimization

On paper, it appears to be a monumental leap forward.

But here’s the real, practical question:

Is Llama 4 Scout genuinely superior to the Llama 3 series in real-world production systems?

Benchmarks appear impressive. Marketing claims are bold. But experienced engineers and AI architects care about something else entirely:

- Output consistency

- Infrastructure expenditure

- Deployment friction

- Fine-tuning robustness

- Long-term maintainability

- Latency under load

- Cost per inference

In this comprehensive 2026 deep-dive, we examine:

- Architectural philosophy (MoE vs dense transformers)

- Benchmark interpretation with context

- Context window reality vs promotional hype

- GPU requirements and hosting costs

- Deployment trade-offs

- Stability and inference predictability

- Practical use cases

- Honest strengths and weaknesses

- Common misconceptions

- Expert-level decision framework

If you’re evaluating Llama 4 Scout vs Llama 3.3 70B or other Llama 3 variants, this guide will help you make a strategic, evidence-based decision.

Why This Comparison Matters in 2026

The shift from Llama 3 to Llama 4 Scout is not incremental — it represents a fundamentally different design philosophy.

We are not discussing a minor parameter increase. We are examining:

- A different computational paradigm

- A radically expanded context window

- New scaling mechanics

- Altered memory utilization patterns

- Distinct cost structures

- Divergent performance trade-offs

This comparison is especially critical for:

- AI startups operating under budget constraints

- Enterprise ML teams deploying at scale

- Independent developers self-hosting models

- Researchers experimenting with long-context reasoning

- SaaS founders optimizing inference margins

- Infrastructure engineers balancing throughput and cost

Choosing the wrong model can result in:

- Escalating cloud invoices

- Degraded latency

- Inconsistent outputs

- Operational instability

- Debugging complexity

- Infrastructure bottlenecks

Modern AI engineering is not about adopting the largest model available.

It is about selecting the model aligned with your workload characteristics.

Overview: What Are Llama 4 Scout & Llama 3 Models?

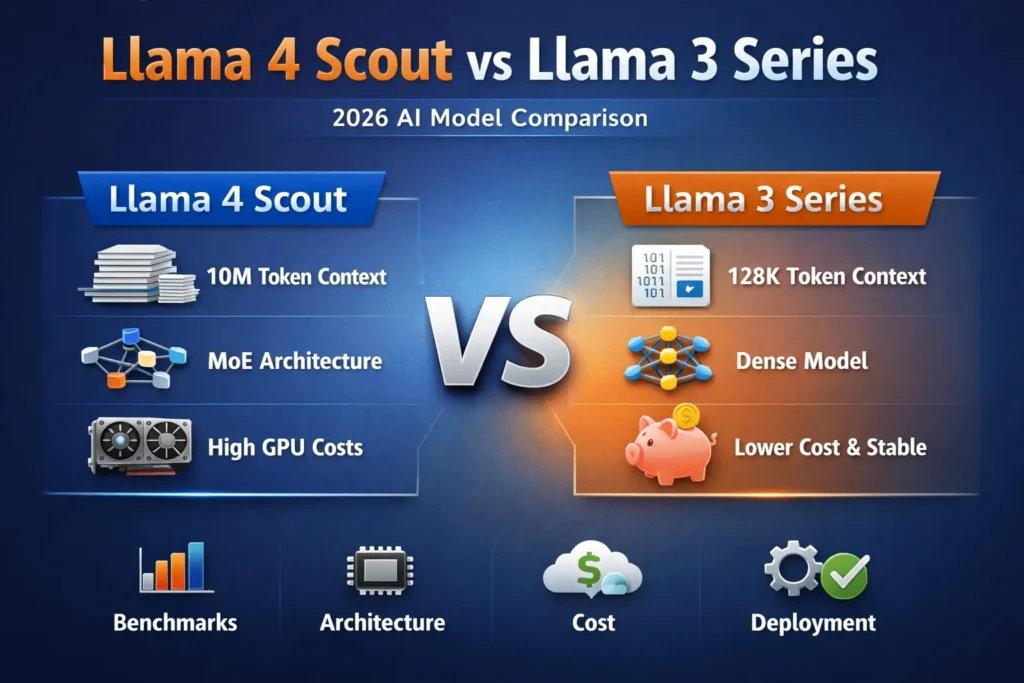

Llama 3 Series Overview

The Llama 3 family introduced high-performance dense transformer architectures across multiple parameter scales:

- 8B

- 70B

- Llama 3.3 70B (an optimized refinement of the 70B model)

Core Attributes of Llama 3

- Context window: 128K tokens from Llama 3.1 onward (the original Llama 3 release supported 8K)

- Dense transformer backbone

- Strong reasoning benchmarks

- Reliable coding capability

- Easier deployment configuration

- Stable inference behavior

- Mature tooling ecosystem

Llama 3 quickly became favored for:

- Coding copilots

- Retrieval-Augmented Generation (RAG) systems

- Fine-tuning workflows

- On-premise deployment

- AI SaaS backends

- Conversational agents

Its popularity emerged from a balanced blend of:

Performance + Stability + Accessibility + Cost-efficiency

Llama 4 Scout Overview

Llama 4 Scout represents a more experimental and ambitious direction.

Major innovations include:

- Mixture-of-Experts (MoE) transformer design

- Up to 10 million token context capacity

- Improved long-sequence reasoning

- Enhanced scaling efficiency

Scout was engineered for:

- Massive document ingestion

- Enterprise knowledge repositories

- Legal and research archives

- Multi-document synthesis

- Long-memory AI workflows

- Advanced research copilots

However, architectural sophistication introduces operational complexity.

Architecture Differences: MoE vs Dense Models

Understanding architecture is essential because it determines:

- Compute utilization

- Inference stability

- Fine-tuning flexibility

- Debugging complexity

- Latency patterns

- Hardware requirements

- Memory allocation

Llama 3: Dense Transformer Architecture

Dense transformer models activate all parameters for every token processed.

Advantages of Dense Models

- Predictable computation pathways

- More predictable inference behavior

- Stable output generation

- Simplified fine-tuning

- Easier diagnostics

- No expert-routing variance

Dense architectures are conceptually straightforward. Every forward pass engages the full parameter space.

Disadvantages

- Higher compute per token

- Less scaling efficiency at extreme sizes

- Larger energy footprint

However, simplicity often translates into production reliability.

Llama 4 Scout: Mixture-of-Experts (MoE)

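MoE models activate only a subset of parameters for each token. Llama 4 Scout, for example, has roughly 109B total parameters and 16 experts, but activates only about 17B parameters per token.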

Instead of utilizing the entire network, tokens are dynamically routed to specialized “experts.”
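To make routing concrete, here is a minimal top-k gating sketch in plain Python. It is a generic illustration of MoE routing, not Llama 4 Scout's actual router; the function names, the toy "experts," and the choice of `top_k=2` are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top_k experts and mix their outputs (hypothetical sketch)."""
    # One router score per expert: dot product of the token with that expert's router row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the top_k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalise the selected scores into gate weights that sum to 1.
    gates = softmax([logits[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](token)
        output = [o + gate * y for o, y in zip(output, expert_out)]
    return output, chosen


# Toy setup: four "experts" that simply scale their input by 1x, 2x, 3x and 4x.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[0.1, 0.0], [0.5, 0.0], [0.9, 0.0], [0.2, 0.0]]
out, used = moe_forward([1.0, 0.0], router, experts, top_k=2)
print(used)  # → [2, 1]
```

Only two of the four experts run for this token, which is where MoE's lower active compute comes from. It is also why small shifts in router scores can send similar tokens down different paths.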

Advantages of MoE

- Improved parameter efficiency

- Scalable architecture

- Reduced active compute per token

- Potentially higher performance-to-compute ratio
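The "reduced active compute" advantage comes with a memory catch: every expert must stay resident in GPU memory even though only some of them run per token. The back-of-envelope sketch below uses Meta's published figures for Scout (about 17B active and 109B total parameters) and treats Llama 3.3 70B as roughly 70B dense parameters. The "2 FLOPs per active parameter" rule and 16-bit (2-byte) weights are standard approximations, not measurements.

```python
from dataclasses import dataclass


@dataclass
class ModelSpec:
    name: str
    total_params: float   # every parameter that must be stored
    active_params: float  # parameters actually used for a single token


def flops_per_token(spec: ModelSpec) -> float:
    # Rule of thumb: a forward pass costs ~2 FLOPs (one multiply, one add) per active parameter.
    return 2.0 * spec.active_params


def weight_memory_gib(spec: ModelSpec, bytes_per_param: float = 2.0) -> float:
    # All weights must be resident, regardless of how many are active per token.
    return spec.total_params * bytes_per_param / 2**30


scout = ModelSpec("Llama 4 Scout (approx.)", total_params=109e9, active_params=17e9)
dense = ModelSpec("Llama 3.3 70B (approx.)", total_params=70e9, active_params=70e9)

for spec in (scout, dense):
    print(f"{spec.name}: {flops_per_token(spec) / 1e9:.0f} GFLOPs/token, "
          f"{weight_memory_gib(spec):.0f} GiB of 16-bit weights")
```

Under these approximations Scout needs roughly a quarter of the per-token compute of the dense 70B model, but about 1.6 times the weight memory.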

Disadvantages

- Complex routing mechanisms

- Increased debugging difficulty

- Potential output variability

- Infrastructure sophistication

- Expert load imbalance issues
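Load imbalance can be quantified from routing logs. The sketch below assumes a hypothetical log holding the expert index chosen for each token; it reports each expert's share of tokens and the ratio of the busiest expert's load to the average, where 1.0 means perfectly even routing.

```python
from collections import Counter
from typing import List, Sequence


def expert_load(assignments: Sequence[int], num_experts: int) -> List[float]:
    """Fraction of routed tokens handled by each expert."""
    counts = Counter(assignments)
    total = len(assignments)
    return [counts.get(e, 0) / total for e in range(num_experts)]


def imbalance_ratio(loads: Sequence[float]) -> float:
    """Busiest expert's load divided by the mean load (1.0 = perfectly balanced)."""
    mean = sum(loads) / len(loads)
    return max(loads) / mean


# Hypothetical routing log: the expert index picked for each of eight tokens.
log = [0, 0, 0, 0, 0, 1, 2, 3]
loads = expert_load(log, num_experts=4)
print(loads)                   # → [0.625, 0.125, 0.125, 0.125]
print(imbalance_ratio(loads))  # → 2.5
```

Here one expert handles 62.5% of tokens, 2.5 times its fair share. If experts were spread across GPUs, the device hosting that expert would become the bottleneck while the others sat partly idle.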

While MoE can outperform dense models in controlled benchmarks, real-world coding and reasoning tasks sometimes reveal variability.

Architecture directly impacts:

- Inference latency

- Throughput consistency

- GPU allocation

- Fine-tuning complexity

- Deployment architecture

Head-to-Head Benchmark Comparison

Benchmarks are useful indicators, but they must be interpreted cautiously.

Below is a contextualized comparison:

| Metric | Llama 4 Scout | Llama 3.3 70B |
|---|---|---|
| Context Window | 10M tokens | 128K tokens |
| Architecture | MoE | Dense |
| Long-Context Reasoning | Exceptional | Moderate |
| Coding Stability | Mixed reports | Consistent |
| Hardware Demand | Extremely high | Manageable |
| Fine-Tuning Simplicity | Complex | Easier |
| Deployment Complexity | High | Moderate |
| Ecosystem Maturity | Emerging | Mature |

Real-World Performance vs Benchmarks

Common benchmark suites include:

- MMLU

- GSM8K

- HumanEval

- ARC

- TruthfulQA

These evaluations measure knowledge, reasoning, math, coding, and truthfulness under controlled conditions.

However, benchmarks rarely capture:

- Production latency under concurrent load

- Memory scaling costs

- Infrastructure bottlenecks

- Real-user prompt variability

- Long-tail error patterns

- Stability across extended sessions
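Latency under concurrent load is usually reported as percentiles rather than averages, because tail latency is what users notice. Below is a minimal nearest-rank percentile sketch applied to hypothetical request timings; the numbers are invented for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of the data at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical end-to-end latencies (seconds) for ten requests under load.
latencies = [0.8, 0.9, 0.9, 1.0, 1.1, 1.2, 1.3, 1.5, 2.4, 6.0]
print(f"mean={sum(latencies) / len(latencies):.2f}s "
      f"p50={percentile(latencies, 50)}s p95={percentile(latencies, 95)}s")
```

The mean (about 1.71 s) hides a single 6-second outlier that p95 exposes, and a benchmark score says nothing about either number.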

Where Llama 4 Scout Excels

- Ultra-long document synthesis

- Enterprise archival systems

- Academic research workflows

- Massive codebase analysis

- Knowledge graph construction

Where Llama 3 Maintains Advantage

- Coding assistants

- Stable SaaS backends

- Cost-sensitive deployments

- Local hosting environments

- Small-team infrastructure setups

In practice, many developers prioritize:

Consistency > Benchmark dominance

Context Window Reality Check: What 10 Million Tokens Actually Means

The 10M token context window is the most promoted feature of Scout.

10 million tokens is roughly equivalent to:

- Thousands of pages of legal text

- Entire software repositories

- Extensive academic archives

- Multi-year communication logs
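A rough conversion shows the scale. The sketch assumes about 0.75 English words per token and 500 words per printed page; both are common rules of thumb, and real ratios vary with tokenizer, language, and formatting.

```python
def tokens_to_pages(tokens: int, words_per_token: float = 0.75, words_per_page: int = 500) -> float:
    """Approximate printed pages for a token count (rule-of-thumb ratios, not tokenizer-exact)."""
    words = tokens * words_per_token
    return words / words_per_page


for window in (128_000, 10_000_000):
    print(f"{window:>10,} tokens ≈ {tokens_to_pages(window):,.0f} pages")
```

Under these assumptions, 10M tokens is on the order of 15,000 pages, versus roughly 200 pages for a 128K window.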

Impressive but expensive.

Practical Limitations

Extremely long context introduces:

- Substantial VRAM consumption

- Increased inference latency

- Higher cost per request

- Complex optimization requirements

- Larger attention computation overhead
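Much of that VRAM goes to the key-value (KV) cache, which grows linearly with context length. The estimate below uses the Llama 3.3 70B attention layout (80 layers, 8 grouped-query KV heads, head dimension 128) with 16-bit cache entries for a single sequence. It deliberately stretches the same layout to 10M tokens to show the scaling; Scout's real figure differs because its layer count and attention design are not the same, so treat that row as illustrative only.

```python
from dataclasses import dataclass


@dataclass
class AttentionLayout:
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # 16-bit (bf16/fp16) cache entries


def kv_cache_bytes(layout: AttentionLayout, seq_len: int, batch: int = 1) -> int:
    # Factor of 2: one key vector and one value vector per KV head, per layer, per token.
    per_token = 2 * layout.num_layers * layout.num_kv_heads * layout.head_dim * layout.bytes_per_value
    return per_token * seq_len * batch


llama33_70b = AttentionLayout(num_layers=80, num_kv_heads=8, head_dim=128)

for seq_len in (8_192, 131_072, 10_000_000):
    gib = kv_cache_bytes(llama33_70b, seq_len) / 2**30
    print(f"{seq_len:>10,} tokens -> {gib:,.1f} GiB of KV cache")
```

At 128K tokens the cache alone is 40 GiB per sequence, on top of the weights; the same layout at 10M tokens would need roughly 3,000 GiB, which is why very long contexts push deployments onto many GPUs.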

Many real-world applications need only:

- 8K–32K tokens

- Occasional RAG augmentation

- Structured memory compression

For many startups, 128K tokens is already more than sufficient.

Ultra-long context is powerful but specialized.
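RAG augmentation keeps prompts inside a small budget by sending only the most relevant passages instead of an entire archive. Below is a minimal greedy packing sketch; the `Chunk` type, the relevance scores, and the precomputed token counts are hypothetical stand-ins for whatever a real retriever and tokenizer would produce.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Chunk:
    text: str
    score: float  # relevance from a retriever (hypothetical)
    tokens: int   # token count from a tokenizer (hypothetical)


def pack_context(chunks: Sequence[Chunk], budget: int) -> List[Chunk]:
    """Greedily add the highest-scoring chunks that still fit in the token budget."""
    selected: List[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        # Skip any chunk that would overflow the budget, but keep trying smaller ones.
        if used + chunk.tokens <= budget:
            selected.append(chunk)
            used += chunk.tokens
    return selected


corpus = [
    Chunk("contract clause 4.2", score=0.92, tokens=6_000),
    Chunk("amendment summary", score=0.88, tokens=20_000),
    Chunk("appendix table", score=0.75, tokens=9_000),
    Chunk("unrelated memo", score=0.10, tokens=3_000),
]
picked = pack_context(corpus, budget=16_000)
print([c.text for c in picked])  # → ['contract clause 4.2', 'appendix table']
```

The 20K-token amendment is skipped because it alone exceeds the 16K budget; a real pipeline might split it into smaller chunks instead.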

Hardware & Deployment Costs

This is where many articles lack depth.

Long-context MoE models dramatically increase memory and compute demands.

GPU Requirements Comparison

| Deployment Factor | Llama 4 Scout | Llama 3.3 70B |
|---|---|---|
| Recommended GPUs | Multi-GPU server; clusters for very long contexts | Fewer GPUs |
| VRAM Needs | High; extreme at long contexts | Manageable |
| Cloud Cost | Significant | Moderate |
| Local Hosting | Impractical for most | Feasible |

Critical Insight

If self-hosting:

Llama 3 is considerably easier to deploy and maintain.

Scout may require:

- Enterprise GPU clusters

- Distributed inference serving (tensor or expert parallelism)

- Optimized memory partitioning

- Sophisticated load balancing

Longer context means:

More tokens processed →

Greater compute demand →

Higher inference expenditure
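That chain is not linear end to end. With standard full self-attention, the weight multiplications grow linearly with prompt length n, but computing attention scores and the weighted sum of values grows with n². The approximation below (about 2 FLOPs per parameter per token, plus roughly 4·L·n²·d for attention, ignoring the saving from causal masking) reuses approximate Llama 3.3 70B dimensions; models that use sparse or chunked attention would scale differently.

```python
from typing import Tuple


def prefill_flops(n: int, params: float = 70e9, layers: int = 80,
                  attn_dim: int = 8192) -> Tuple[float, float]:
    """Return (linear_term, attention_term) FLOPs for processing an n-token prompt."""
    # Projections and MLPs: ~2 FLOPs per parameter per token, so linear in n.
    linear = 2.0 * params * n
    # QK^T scores plus the attention-weighted sum of V: ~4 * n^2 * d per layer, so quadratic in n.
    attention = 4.0 * layers * n * n * attn_dim
    return linear, attention


for n in (8_192, 131_072, 1_048_576):
    linear, attention = prefill_flops(n)
    share = attention / (linear + attention)
    print(f"{n:>9,} tokens: total {linear + attention:.2e} FLOPs, attention share {share:.0%}")
```

Under these assumptions attention is only about an eighth of the work at 8K tokens but well over half by 128K, so each further increase in context costs disproportionately more.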

For startups, marginal benchmark gains rarely justify the steep infrastructure costs.

Cost Per Million Tokens

While provider pricing varies, conceptually:

- Larger context windows increase per-request computation

- MoE routing introduces overhead

- Long-memory attention amplifies cost

In AI SaaS economics:

Lower inference cost = higher profit margin
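Because hosted APIs commonly bill per million input and output tokens, per-request cost scales directly with prompt length. The prices below are hypothetical placeholders, not any provider's actual rates, and the per-request revenue figure is likewise illustrative.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request under per-million-token pricing."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def gross_margin(revenue: float, cost: float) -> float:
    """Share of revenue left after inference cost (negative means a loss)."""
    return (revenue - cost) / revenue


# Hypothetical prices: $0.20 per million input tokens, $0.60 per million output tokens.
IN_PRICE, OUT_PRICE = 0.20, 0.60
REVENUE_PER_REQUEST = 0.01  # hypothetical: what the product earns per request

short_prompt = request_cost(4_000, 500, IN_PRICE, OUT_PRICE)
long_prompt = request_cost(1_000_000, 500, IN_PRICE, OUT_PRICE)
print(f"4K-token prompt: ${short_prompt:.4f}, margin {gross_margin(REVENUE_PER_REQUEST, short_prompt):.0%}")
print(f"1M-token prompt: ${long_prompt:.4f}, margin {gross_margin(REVENUE_PER_REQUEST, long_prompt):.0%}")
```

At identical prices, the 1M-token request costs about 180 times more than the 4K one and turns a healthy margin deeply negative.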

Best Use Cases for Each Model

Choose Llama 4 Scout If:

- You process multi-million token archives

- You manage enterprise-scale document systems

- You operate high-performance GPU clusters

- Long-context reasoning is essential

- You build AI research platforms

Choose Llama 3 If:

- You develop coding assistants

- You require stable outputs

- You fine-tune models frequently

- You deploy locally

- You operate under cost constraints

- You prioritize predictable inference

For most startups, Llama 3 remains the pragmatic choice.

Pros & Cons

Llama 4 Scout Pros

- Massive context capacity

- Strong long-sequence reasoning

- Scalable MoE efficiency

- Enterprise-oriented

Llama 4 Scout Cons

- Expensive infrastructure

- Complex deployment

- Routing variability

- Overkill for typical applications

Llama 3 Series Pros

- Stable inference

- Strong coding consistency

- Easier fine-tuning

- Lower hardware barrier

- Mature ecosystem

Llama 3 Series Cons

- Smaller context window

- Less extreme scaling capacity

- Limited ultra-long memory

Step-by-Step Decision Framework

Define Context Requirements

Under 128K → Llama 3

Multi-million tokens → Scout

Assess Infrastructure

Limited GPU budget → Llama 3

Enterprise cluster → Scout

Evaluate Stability Needs

Mission-critical reliability → Llama 3

Research experimentation → Scout

Analyze Budget Constraints

Cost-sensitive → Llama 3

Enterprise-funded → Scout
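The four checks can be encoded as a simple voting function. This is a hypothetical sketch: the input fields, the 128K threshold taken from the first step, and the rule that ties go to Llama 3 (the pragmatic default for most startups) are assumptions for illustration, not a formal methodology.

```python
from dataclasses import dataclass
from typing import List, Tuple

LLAMA_3 = "Llama 3"
SCOUT = "Llama 4 Scout"
LLAMA_3_CONTEXT_LIMIT = 128_000  # tokens


@dataclass
class Requirements:
    context_tokens: int           # longest input that must be processed in one pass
    has_enterprise_cluster: bool
    mission_critical: bool        # True = reliability first, False = research/experimentation
    cost_sensitive: bool


def recommend(req: Requirements) -> Tuple[str, List[str]]:
    """Apply the four-step framework; return the overall pick and the per-step votes."""
    votes = [
        SCOUT if req.context_tokens > LLAMA_3_CONTEXT_LIMIT else LLAMA_3,  # 1. context
        SCOUT if req.has_enterprise_cluster else LLAMA_3,                  # 2. infrastructure
        LLAMA_3 if req.mission_critical else SCOUT,                        # 3. stability
        LLAMA_3 if req.cost_sensitive else SCOUT,                          # 4. budget
    ]
    # A 2-2 tie falls back to Llama 3 as the lower-risk default.
    overall = SCOUT if votes.count(SCOUT) > votes.count(LLAMA_3) else LLAMA_3
    return overall, votes


startup = Requirements(context_tokens=32_000, has_enterprise_cluster=False,
                       mission_critical=True, cost_sensitive=True)
archive_lab = Requirements(context_tokens=5_000_000, has_enterprise_cluster=True,
                           mission_critical=False, cost_sensitive=False)
print(recommend(startup)[0])      # → Llama 3
print(recommend(archive_lab)[0])  # → Llama 4 Scout
```

A stricter variant might treat context as a hard gate, since an input longer than 128K tokens cannot fit in Llama 3's window without chunking or RAG.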

Common Myths & Misconceptions

Llama 4 Scout Always Outperforms Llama 3

Performance depends on workload characteristics.

Bigger Context Means Better AI

Unused context adds cost, not intelligence.

Benchmarks Tell the Full Story

Real-world stability matters more.

FAQs

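Q: Is Llama 4 Scout better than Llama 3 for coding?

A: Not always. Many developers report that Llama 3 provides more consistent coding output.

Q: What are the main differences between Llama 4 Scout and Llama 3?

A: The main differences are architecture (MoE vs dense), context window (10M vs 128K tokens), and hardware requirements.

Q: Do I need Llama 4 Scout's 10M-token context window?

A: Only if you process extremely large documents or enterprise-scale archives.

Q: Why is Llama 3 cheaper to run?

A: It generally requires fewer resources and has lower inference cost.

Q: Which model is better for startups?

A: Most startups benefit more from Llama 3 due to its stability and cost efficiency.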

Conclusion

In 2026, choosing between Llama 4 Scout and the Llama 3 series is more than a routine upgrade decision; it reflects a strategic evolution in model design. Llama 4 Scout introduces a Mixture-of-Experts (MoE) approach focused on scalability, efficiency, and ultra-long contexts, making it appealing for large-scale AI deployments and advanced workloads.

The Llama 3 series, on the other hand, continues to offer a dependable dense architecture, simpler deployment, and predictable performance across standard enterprise and development environments. It remains a strong choice for teams prioritizing stability and easier infrastructure management.